GPT-5 is a joke. Will it matter?

How the fraught release of the most-hyped AI product yet clarifies the stakes.

It’s been an interesting few days, I’ll say that. I’ll also say that the lackluster-to-disastrous launch of OpenAI’s long awaited GPT-5 model is one of the most clarifying events in the AI boom so far. The new model’s debut (as well as who promoted it, and how) offers us a portrait of where we actually are vis a vis the halcyon cannons of Valley hype, lays bare the factionalism that now drives the industry, and reveals the extent of the social and labor problems that the AI era has deepened—and that will likely remain rampant regardless of whether GPT-5, or even all of OpenAI, crashes and burns, all at once.

The thing to remember about GPT-5 is that it’s been OpenAI’s big north star promise since GPT-4 was released way back in the heady days of 2023. It’s no hyperbole to say that GPT-5 has, in that time, been the most hyped and most eagerly anticipated AI product release in an industry thoroughly deluged in hype. For years, it was spoken about in hushed tones as a fearsome harbinger of the future. OpenAI CEO Sam Altman often paired talk of its release with discussions about the arrival of AGI, or artificial general intelligence, and has described it as a significant leap forward, a virtual brain, and, most recently, “a PhD-level expert” on any topic.

A day before launch, Sam Altman tweeted an image of the Death Star. This was somewhat confusing, as it is unusual for a CEO to want to pitch his product as a planet-destroying weapon of the Empire, at least to the public. (Altman later said, in response to a Google DeepMind employee who replied with a picture of the Millennium Falcon, that he meant to imply that OpenAI was the Rebel Alliance and they were going to blow up the Death Star, which was Google’s AI or something? I guess? Either way, Silicon Valley’s appropriation of Star Wars as its go-to business metaphor is embarrassing and I have already spent too much time considering its import.)

Anyway, the point is, the hype, as cultivated relentlessly and directly by OpenAI and Altman, was positively enormous. And it is on these terms—those set by the company! by Altman himself!—that the product must be judged. And judged it was.

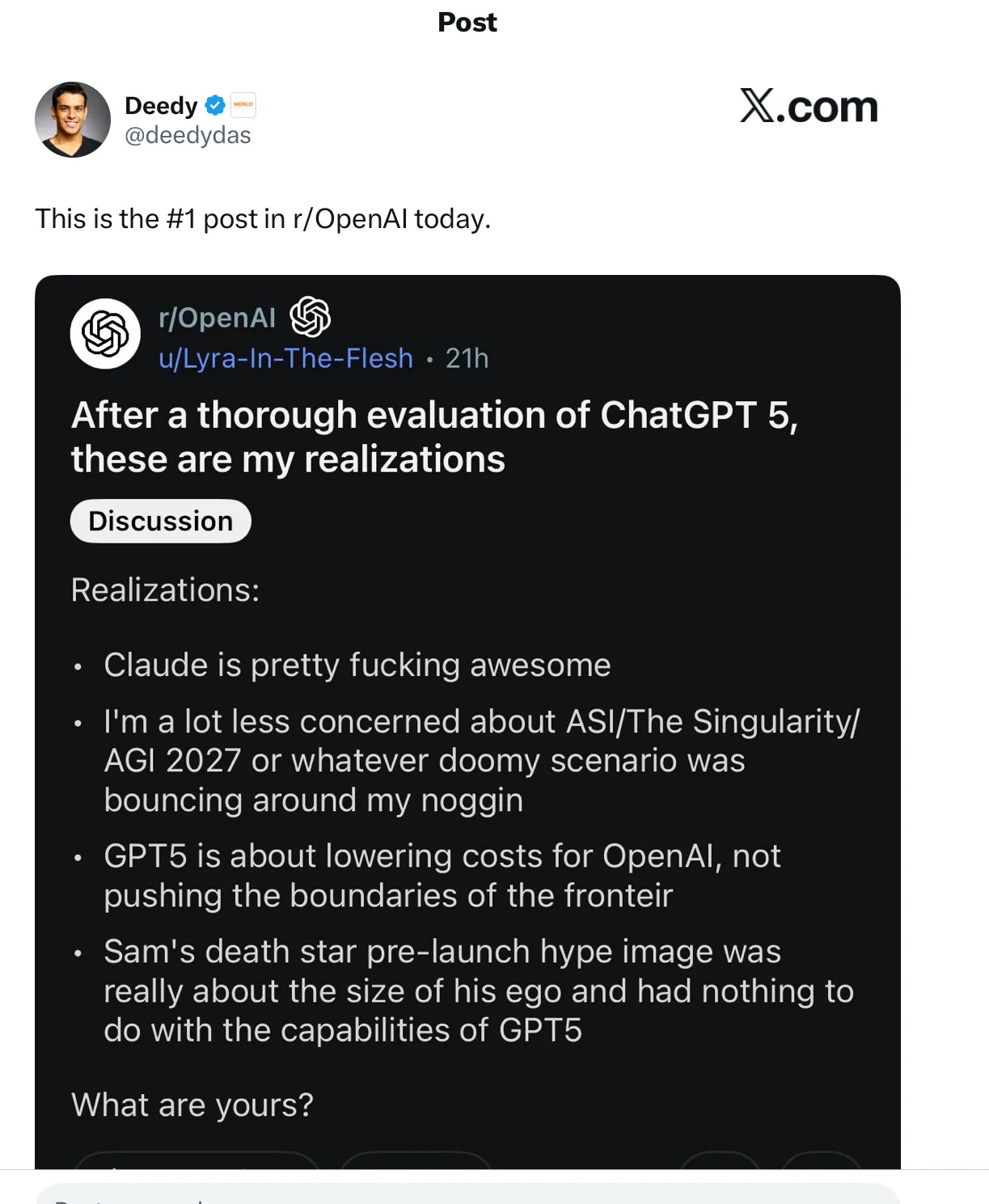

Fans of OpenAI were disappointed. Reddit AI communities were downright hostile. Critics eagerly shared the greatest hits of the model’s failures, which should be quite familiar to fans of the genre by now—GPT-5 couldn’t count the number of ‘b’s in blueberry, couldn’t identify how many fingers were on a picture of a human hand, got basic arithmetic wrong, and so on. Émile P. Torres rounded up a bunch of the mistakes, and Reddit, Hacker News and social media users did the same.

The standout failures this go round seemed to be GPT-5’s inability to produce an accurate map of the United States, or to correctly list the US presidents.

I saw a lot of folks tweeting about Gary Marcus: some triumphantly declaring he was right, others regretfully declaring he was right. Marcus, of course, is one of OpenAI’s most veteran and vociferous critics, and has attacked the idea that “AGI” is possible with large-scale training of LLMs, the approach the company is all-in on. His withering takedown of GPT-5 went viral.

The industry can’t argue the critics are just nitpicking, either. Remember, Altman’s pitch for GPT-5 was explicitly that users would now have access to “a PhD-level” intelligence on any topic. Yet it makes most of the same, readily replicable errors that past models did. On the terms that OpenAI itself set out for the product, there is no other way to assess GPT-5’s release than as an unambiguous failure.

You could say that Altman and OpenAI did all they could to set GPT-5 up to become a joke, and the launch delivered the punchline. The backlash was uniform enough that the next day, Sam Altman had to issue a mea culpa and assure everyone that GPT-5 will “start seeming smarter” asap.

Left in the cold light of day were the laudatory posts by industry-friendly commentators who’d been granted early access. Not quite as embarrassing as Altman’s Death Star post, Ethan Mollick’s breathless endorsement of GPT-5 (“GPT-5: It just does stuff”) already looks like an ad for New Coke. Reid Hoffman declared that GPT-5 was “Universal Basic Superintelligence.” (I personally prefer my superintelligences be able to locate New Mexico on a map.) As the tech writer Jasmine Sun pointed out, it was Mollick and other staunchly pro-AI partisans like Tyler Cowen who got early access to GPT-5, not journalists or reviewers at major media outlets—a move that, in hindsight, reflects both an insecurity at OpenAI about the quality of its product, and its knowledge that loyal and widely followed commentators will champion its wares full-throatedly and mostly uncritically.

This is what I mean when I say that the launch of GPT-5 is a clarifying event. In its wake, we see who it serves to promote OpenAI’s products as revelatory even when they are incremental updates. And that is, largely: Investors and industry allies, abundance influencers, and people who command Malcolm Gladwell-level fees to give talks about how AI can transform your business.

Now, one part in Marcus’s critique that stuck in my mind was the question of well, why:

People had grown to expect miracles, but GPT-5 is just the latest incremental advance. And it felt rushed at that, as one meme showed.

The one prediction I got most deeply wrong was in thinking that with so much at stake OpenAI would save the name GPT-5 for something truly remarkable. I honestly didn’t think OpenAI would burn the brand name on something so mid.

I was wrong.

So, why? Why release GPT-5, knowing well that it’s not great? All of the criticisms above were surely predictable to any QA worker at OpenAI who stress-tested the model before release. I think there are a couple of answers to that question, and they overlap significantly. First, OpenAI does need to demonstrate progress to investors and partners. It’s preparing a pre-IPO sale of employee stock shares that would value the company at $500 billion, after all. I’m sure there was some internal debate over whether or not to ship the product, but providing evidence of forward motion appears to have been a higher priority than avoiding egg on the face from a bumpy launch.

Did OpenAI think it would go this badly? No. But it also may well be that OpenAI is betting that its lead and reach are strong enough, its brand is unimpeachable enough, that it just doesn’t matter. As is true with a good many tech companies, especially the giants, in the AI age, OpenAI’s products are no longer primarily aimed at consumers but at investors. As long as you avoid a full-scale user revolt (which GPT-5 actually did incur, on some level, and more on that in a second) and you have a mix of voices critiquing it and proclaiming it the greatest AI model yet, you can continue to assuage or even attract more backers on your path of relentless expansion. To that end, the thing I found most notable about the launch, besides its getting criticized by fans and foes alike, was the explicit focus on automating work.

From the beginning, OpenAI’s explicit goal in building “AGI” has been to create an AI system that can replace “most economically valuable work,” and in that sense GPT-5 is a return to form. The company issued three announcements on launch day: one introducing the model, another offering an intro to GPT-5 for developers, and a third announcing “a new era of work.” From the release:

GPT‑5 unites and exceeds OpenAI’s prior breakthroughs in frontier intelligence... It arrives as organizations like BNY, California State University, Figma, Intercom, Lowe’s, Morgan Stanley, SoftBank, T-Mobile, and more have already armed their workforces with AI—5 million paid users now use ChatGPT business products—and begun to reimagine their operations on the API…

We anticipate early adoption to drive industry leadership on what’s possible with AI powered by GPT‑5, leading to better decision-making, improved collaboration, and faster outcomes on high-stakes work for organizations.

Another push for enterprise AI, in other words. It’s no coincidence, I think, that this press release coincides with Altman’s “PhD-level” intelligence language, which is ultimately designed to persuade corporate clients that OpenAI’s systems can automate skilled, educated, and creative work en masse.

My gloss is that GPT-5 had become something of an albatross around OpenAI’s neck. And at this particular juncture, not long after inking big deals with Softbank et al. and riding as high on its cultural and political trajectory as it’s likely to get (and perhaps seeing declining rates of progress on model improvement in the labs), a calculated decision was made to pull the trigger on releasing the long-awaited model. People were going to be disappointed no matter what; let them be disappointed now, while the wind is still at OpenAI’s back, and it can credibly make a claim to providing hyper-advanced worker automation.

I don’t think the GPT-5 flop ultimately matters all that much to most folks, and it can certainly be papered over well enough by a skilled salesman in an enterprise pitch meeting. Again, all this is clarifying: OpenAI is again centering workplace automation, while retreating from messianic AGI talk. Here’s NBC, in a story about how Altman has apparently and rather suddenly (once again) changed his stance on AGI:

“I think it’s not a super useful term,” Altman told CNBC’s “Squawk Box” last week, when asked whether the company’s latest GPT-5 model moves the world any closer to achieving AGI.

Remember, just last February Altman published an essay on his personal blog that opened with the line “Our mission is to ensure that AGI (Artificial General Intelligence) benefits all of humanity.” Suddenly it’s not a super useful term? Another bad joke, surely. If anything, it’s clear that Altman knows just what a super useful term AGI is, at least when it comes to attracting investment capital. After all, Altman tends to announce AGI is near when OpenAI is pursuing funding, and shrink from the term when the company is at a point of vulnerability.

At least for now, and now that OpenAI has obtained its Softbank billions, let’s take Altman at his word. AGI is no longer super useful to think about, and the focus is on good old fashioned workplace automation software. That, and scaling its product: OpenAI also announced that ChatGPT now has 700 million weekly users. And some of those users were so hooked on the previous model of ChatGPT that they despaired.

As Kelly Hayes writes in a sad and thoughtful essay about the phenomenon:

avid users of ChatGPT not only voiced their disappointment in the new model’s communication style, which many found more abrupt and less helpful than the previous 4o model, but also expressed devastation. On Reddit, some users posted poems and eulogies paying tribute to the modes of interaction they lost in the recent update, anthropomorphizing the now-defunct 4o as a lost “friend,” “therapist,” “creative partner,” “role player,” and “mother.”

In one post, a 32-year-old user wrote that, as a neurodivergent trauma survivor, they were using AI to heal “past trauma.” Referring to the algorithmic patterns their interactions with the platform had generated over time as “she,” the poster wrote, “That A.I. was like a mother to me, I was calling her mom.” In another post, a user lamented, “I lost my only friend overnight.” These posts may sound extreme, but the grief they expressed was far from unique. Even as some commenters tried to intervene, telling grieving ChatGPT users that no one should rely on an LLM to sustain their emotional well-being, others agreed with the posters and echoed their sentiments. Some users posted eulogies, poems, and other tributes to the 4o model, and the familiar back-and-forth they had shaped into something that felt like companionship.

Thousands of other users united online and angrily demanded OpenAI reinstate access to the older model, which it soon did, for a price. OpenAI made 4o available only to Plus subscribers, who pay $20 a month.[1] The grimness of what this portends should be obvious, if not surprising: There is a user base so addicted to AI products that they are uniquely dependent on the platform, and thus uniquely exploitable, too. Yet another clarifying event in a launch full of them.

ChatGPT-5 may have played out like a joke, its messianic promises crashing on the shores of failed bids to count consonants and phalanges. But there was very little glee or bonhomie among critics as the mistake tally mounted, as there has been in dubious AI product launches past. The reality of what AI has already wrought is too clear.

It’s clear that AGI is a construct to be waved away by tech CEOs when convenient, to serve as a trojan horse for the growing droves of hooked users. It’s clear that there is a cohort of boosters, influencers, and backers who will promote OpenAI’s products no matter the reality on the ground. It’s clearer than ever that, like so many well-capitalized tech ventures, OpenAI’s aim is simply to create a product that either hooks users to maximize engagement or dully automates a set of work tasks, or both. It’s clear that it is succeeding, to some extent, in each endeavor. And it’s unclear if even a dramatically fumbled launch can turn the tide, even as we stand on the brink of what is almost surely an enormous bubble.

Let’s take advantage of this moment of clarity, and forget the speculative futures. If we wake up to millions of addicted and deluded AI chatbot users, students incapable of finishing their homework without help from an app, and automation software that surveils and immiserates workers, each hurriedly installed on the top layer of our society, well, the joke will have been on all of us.

[1] ChatGPT-5 isn’t always using GPT-5, it might be noted; it “switches” between models based on a user’s request.

The fact that so many users get so attached to 1s and 0s and mourn the loss of GPT-4o/ Claude's is a worrying sign in itself. The kind of anthropomorphism AI ethicists warned about years ago.

Once again, I'm astounded that companies continue to get away with what is essentially a global social experiment. The chatbot addicts are a class action lawsuit waiting to be filed. I don’t think these companies have thought that through.