The AI bubble is so big it's propping up the US economy (for now)

Plus how American professors are fighting back against the AI onslaught, a backlash over AI models in Vogue, and more.

Greetings all —

Busy week in BITM land. Got home from some travel only to take off to SF to speak on a panel about AI and work for a CalMatters conference with state lawmakers and labor leaders, and made it back to LA in time for the 404 live event night, where I had the pleasure of bumping into a bunch of BITM readers. I met ambitious students examining the history of tech and labor in the entertainment industry, veteran tech policy campaigners, and some young critical journos. You all are the best. It ALSO makes me think I should throw a BITM event or meetup of some kind one of these days—readers have suggested this before, and I like the idea, I’ve just been so slammed. I digress.

For those interested, I did an interview with TIME Magazine’s Charter project. It was not my most eloquent spot (exhaustion!), but I think some good points came through. I also chatted with indie media/freelancer advocacy outlet Study Hall about AI in journalism.

It was also a busy week in the AI world, though that’s hardly surprising, for reasons pertinent to the news we’re about to discuss. Without further ado, in this issue, we’ll dive into:

-Microsoft’s AI-fueled $4 trillion valuation

-AI is driving so much investment it’s like a “private sector stimulus program” papering over losses to tariffs

And this week’s CRITICAL AI REPORT, with:

-How America’s professors are organizing to fight the push of AI into higher ed

-The fury over an AI model appearing in Vogue

-Inside the collapse of Builder.AI, the AI company that wasn’t

-Good bloody news: Courts uphold ruling that Google’s Play store and billing system are illegal monopolies

For the full report, and to support my 100% grade A independent journalism, please consider becoming a paid subscriber so, well, I can do more of it. I’m very grateful to every subscriber who shells out their hard-earned bucks—equivalent to a cheap-ish beer on draft or a decent latte a month—to make this all possible. Hammers up.

The AI boom reaches new heights

Last week, Microsoft became the second company, after Nvidia, to reach a $4 trillion valuation.

CNN reports:

Microsoft’s shares (MSFT) jumped nearly 4.5% after the market opened on Thursday, pushing its intraday valuation to $4.01 trillion. The company’s shares have risen roughly 28% since the start of this year.

The milestone comes just a year and a half after Microsoft reached a $3 trillion valuation. The company first cracked the $1 trillion mark in April 2019. It follows Nvidia into the $4 trillion valuation club, which hit the mark earlier this month.

There are so many wild and noteworthy things about this milestone that it’s hard to know where to start.

First, let’s take a second to note the sheer insanity of these numbers. The first company to hit a $1 trillion valuation in the modern era was Apple, in 2018. Now, just seven years later, there are nine $1 trillion+ tech companies; Google, Amazon, and Meta are soaring past $2 trillion, and Apple is well past $3 trillion. Much of this expansion of value has occurred in just the last two years, on the back of the AI boom. In less than one year, Nvidia tripled its valuation, becoming the first-ever $4 trillion company in the process.

Second, note the source of Microsoft’s new investor enthusiasm: Like Nvidia, Microsoft seems to be benefitting from the AI boom primarily by selling shovels during the gold rush. While Nvidia cornered the market on the chips needed to run AI’s resource-intensive computation, Microsoft is winning by selling cloud compute in bulk. (Notably, its biggest client is OpenAI, with which it has a byzantine deal, but more on that in a second.) Microsoft’s largest revenue source is now Azure, its cloud compute business. Azure used to be a distant second behind industry leader Amazon Web Services, but better-than-expected sales there last quarter have driven Microsoft to its new peak.

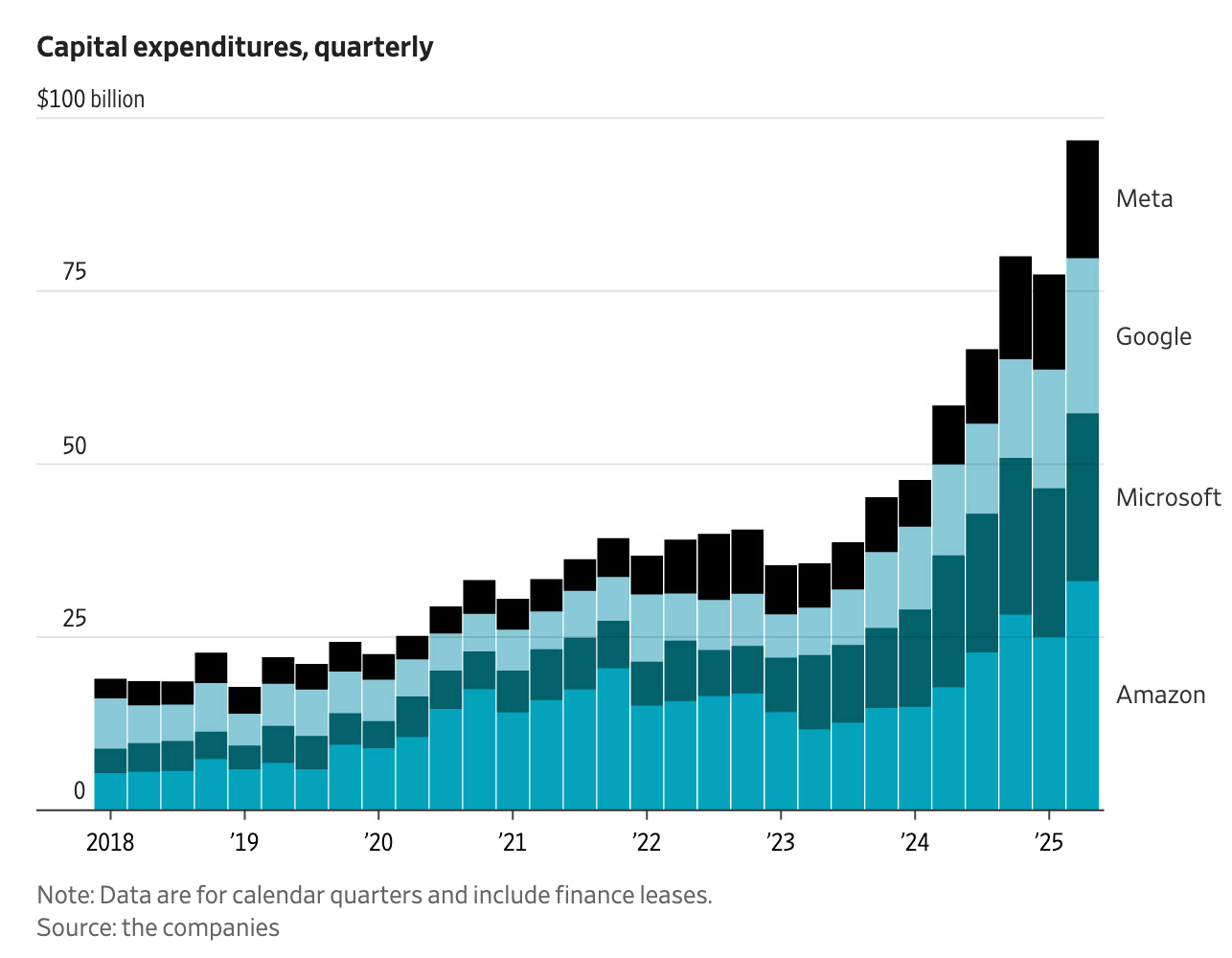

Third, Microsoft and Nvidia are benefitting from bona fide historic levels of investment. Chris Mims cites the analysis of investor and programmer Paul Kedrosky in his most recent column in the Wall Street Journal:

spending on AI infrastructure has already exceeded spending on telecom and internet infrastructure from the dot-com boom—and it’s still growing… one explanation for the U.S. economy’s ongoing strength, despite tariffs, is that spending on IT infrastructure is so big that it’s acting as a sort of private-sector stimulus program.

Capex spending for AI contributed more to growth in the U.S. economy in the past two quarters than all of consumer spending, says Neil Dutta, head of economic research at Renaissance Macro Research, citing data from the Bureau of Economic Analysis.

I’ll just repeat that. Over the last six months, capital expenditures on AI (counting just information processing equipment and software, by the way) added more to the growth of the US economy than all consumer spending combined. Any one of those lines is startling on its own: spending on IT for AI is so big it might be making up for economic losses from the tariffs, serving as a private-sector stimulus program.

To me, this is just screaming bubble. I’m sure I’m not alone. In fact, I know I’m not alone. I’m thinking especially of Ed Zitron’s impassioned and thorough guide to the AI bubble, a rundown of how much money is being poured into and spent on AI versus how much money these products are making. Surprise: the situation as it stands is not sustainable. Worrying signs abound, not least that, so far, the companies benefitting most from AI are those selling the tools to simply build more of it (Nvidia, Microsoft), or those with monopolies through which they can force AI tools onto users en masse with limited repercussions (Google, Meta). In polls, consumers routinely evince negative sentiment towards AI and AI products that outweighs their enthusiasm. And meanwhile, what I’d say is the only truly runaway, organically popular AI product category, chatbots, largely remain big money losers due to the resources they take to run.

As such, these massive valuations feel fishy. I asked Ed for his thoughts on the Microsoft earnings report that pushed the company past $4 trillion. He said:

Microsoft broke out Azure revenue for the first time in history, yet has not updated their annualized revenue for AI since January 29 2025. If things were going so well with AI, why are they not providing these numbers? It's because things aren't going well at all, and they're trying to play funny games with numbers to confuse and excite investors.

Also, $10bn+ of that Azure revenue is OpenAI's compute costs, paid at-cost, meaning no profit (and maybe even loss!) for Microsoft.

Look, I’m no prophet, clearly. I predicted *nearly a year ago* that we were probably witnessing the peak of the AI boom, and while I think I was right with regard to genuine consumer and pop-cultural interest, the investment and expansion have obviously kept right on flowing. It’s to the point that we’re well past dot-com-boom levels of investment and, as Kedrosky points out, approaching railroad-era levels of investment, last seen in the days of the robber barons.

I have no idea what’s going to happen next. But if AI investment is so massive that it’s actually helping to prop up the US economy in a time of growing stress, what happens if the AI stool does get kicked out from under it all?

There could be a crash that exceeds the dot-com bust, at a time when the political environment for navigating such a crash would be nightmarish. There could be a smaller bust, which weeds out the less monopolistic and unprofitable AI companies, or drives them to survive with the help of the tech giants or the ever more AI-friendly US state. Who knows. It does, however, seem increasingly unlikely that there will be no correction at all. (I should also say that I find it implausible that any crash would simply wipe out AI as a product category; as a surveillance and automation tool, AI is simply too alluring to business, and as a chatbot product, it has already hooked millions of users. There are fresh challenges here.)

Given what history tells us about where this all might lead, the prospect of any serious economic downturn being met with a widespread push for mass automation, paired with a regime that is overwhelmingly friendly to the tech and business class and is executing a campaign of oppression and prosecution against precarious manual and skilled laborers, should make us all sit up and pay attention.

THE CRITICAL AI REPORT 8/3/2025

How America’s university professors are fighting the onslaught of AI

But take heart! There are people organizing against the wanton encroachment of AI into every pore of society. To wit: Few sectors have been as inundated with AI as education. From a deluge of AI-generated student papers to AI companies’ hard push to sell administrators on new edtech tools, education, and especially higher ed, is one of the front lines of AI.

Frustrated by their lack of a voice in how AI is being deployed in classrooms and beyond, with administrations all too willing to greenlight AI deals without their consent, professors and academic workers are officially organizing to push back.

In July, the American Association of University Professors (AAUP), the union that represents faculty and academic workers at colleges across the nation, issued a report, Artificial Intelligence and Academic Professions. The report distills the results of a survey of its membership and “calls for the establishment of policies in colleges and universities that prioritize economic security, faculty working conditions, and student learning conditions as advancements in artificial intelligence (AI) technologies accelerate.”

I spoke to Britt Paris, the head of the AAUP's ad hoc Committee on Artificial Intelligence and Academic Professions, about the report and what it means.

BLOOD IN THE MACHINE: What are the report’s key findings?

Britt Paris: This report… found that overall, while faculty are often mandated to use specific technologies, 71% say they have no means to have a say in or make decisions about whether or how technology is procured, deployed, and used at their institutions.

People want to have meaningful power around tech decisions at their institutions. They also want the ability to opt out of technology without risk of punishment. They want better working conditions and are concerned about technology driving down wages, being used for surveillance, and being used to destroy academic freedom. They are also concerned about their intellectual property being used to amass profit for massive technology companies.

We want to be paid meaningfully for our labor and accommodated appropriately, as we educate young people to become active and knowledgeable participants in democracy, with the ability to form thoughtful relationships with one another and with the environment, and live full happy lives. We have done interviews and other engagements since the survey, and have overwhelmingly found that instead of improving working and learning conditions, administrators are too easily wooed by tech industry hype, and they are quick to deploy technology solutions which are cheaper and far less effective in bringing about positive change in higher education.

Why did you deem it crucial to address the spread of AI in higher education? Do you consider there to be a crisis of AI in higher ed?

We started this work last year, and even then higher education was severely devalued and under attack, though in a different way than it has been since January 2025. We have needed better policy around technology in higher education for at least 15 years, since the software-as-a-service model became the norm for bringing higher education online. Higher education has always had a problem with handing out massive amounts of money for technical solutions that could have been, and until 15 years ago were, developed and governed in-house, so to speak.

Educational technology, such as learning management systems (LMS) like Canvas, has always allowed these corporate firms to do anything they want with user data: tracking users’ clicks and activities both within and outside of the LMS, and retaining the data that makes up course materials posted within these LMSs, like syllabi and videos produced by instructors, as well as students’ completed assignments. In 2021, Concordia University notably got in hot water for repurposing a deceased instructor’s videos in an online class. And even now, we have heard from members that administrators are floating the idea of creating AI avatars to "teach" online courses, repurposing course material from people they lay off.

As AI has been incorporated into educational technology, often without users knowing it and with no announcement from the university, and as universities had, before this year, been trading enormous sums of money for AI partnerships, the need to address technology issues in higher ed has become even more urgent. Arizona State began its partnership with OpenAI and other tech firms in 2024, and such partnerships have since started sweeping higher education, from the University of Michigan, which houses the largest, oldest, and best-curated datasets in higher education, to the massive California State University system, which is made up of over 500,000 students.

What are the risks to students?

Students don't like when their instructors use AI for grading or for developing course materials. They find that it "enshittifies" their education, in the words of one survey respondent. Students do want to be challenged, to learn, to develop different perspectives on the world, and to hope for a future that is better than what we have at present. They understand that education provides them at least some of the knowledge, skills, and outlook necessary to live a good life. Another survey respondent noted that, where students are concerned, they were less worried about the "academic honesty" discourse around using generative AI for assignments than about the "failure to learn." Thus, the solutions we develop should not be punitive towards students but should engage them in solidarity, because their learning conditions are at stake as much as our faculty working conditions are.

What are the solutions and ideas for countermeasures you propose? How are you approaching organizing around AI?

In our report, we focus on developing shared governance collectives made up of workers (instructors, staff, and students, elected by those workers) to make decisions around technology procurement, deployment, and training. We have a collective bargaining guide for faculty across the country who are lucky enough to have union contracts; it includes wish lists and sample language that has been effective. We also include other types of documents that faculty without union contracts have used in faculty governing bodies, such as academic senate resolutions, memoranda of understanding, and the like.

We suggest that these shared governance collectives would be better suited to decide on the many issues in higher education than the trustees who currently set policy at institutions and who have, in doing so, deeply devalued higher education in favor of the market-driven bottom line and of lining their own pockets. We also suggest that there is a vast need to develop people's tech advocacy units that provide state-level policymakers with counter-narratives to those of the tech industry and adjacent consulting firms, so that policymakers have the knowledge and ability to develop and vote on policy in favor of the people.

Across industries and communities, no one except the very rich, who are profiting off the gutting of what's left of the public good, is happy with AI's takeover. AI applications are taking over government services and agencies like the Social Security Administration, and are even being used to write regulation. ICE is using AI to incarcerate people. Social media is unusable, and most traffic on the internet goes to AI summaries, not to actual pages. Big tech advocates for AI taking over the creative, knowledge, and journalism sectors, which further muddies the waters around current and past events and obliterates the personal fulfillment that comes from the act of creation itself, as well as from the act of enjoying cultural content.

People are largely unhappy with the way that big tech has rolled out AI. But that dissatisfaction does not change things. AI here, as a tech industry product being rammed down our throats, is part of the drive towards the immiseration of the masses that we have seen ramp up since the pandemic. In the minds of the oligarchs, there's no longer a future, so they are making what cash grabs they can to build bunkers that will save them while the world they have immiserated burns. Making common cause with people across areas of concern and pushing towards change across multiple avenues is the only way out; we cannot have a technical infrastructure that serves humanity when its roots are so rotten.

So we must refuse and cut off what doesn't serve us, and strive to let people be in charge of technology, not oligarchs and not authoritarian governments. We must develop counter-narratives to the dominant view of technology, and especially AI, as an unquestionable good. We must provide examples of fighting back, as we have done with the contract language, and will continue to develop and communicate them. We must connect the multiple attacks we see at present to corporate ownership of technology and capture of the electoral process. And we have to do this while bringing people into the big tent to organize at the local level, from the bottom up.

(I have a book coming out in January 2026 about this very topic. It's not connected to AI, but is grounded in Internet infrastructure.)

How does Trump's AI action plan complicate matters here, if at all?

Our work on the AI committee, both with the AAUP and in our own activities, counters the dominant narrative by providing examples of where and how to push back that will hopefully be compelling to ordinary people, and easier to pick up, organize around, and move forward with.

One of the first executive orders of the second Trump administration, signed in its first week, was Executive Order (EO) 14179, “Removing Barriers to American Leadership in Artificial Intelligence,” which “revokes certain existing AI policies and directives that act as barriers to American AI innovation” and calls for AI to be developed “free from ideological bias or engineered social agendas.” Of course, the ideological bias referenced here is taken up and made more explicit in the AI Action Plan unveiled by the Trump administration on July 23, which states that it's against "woke AI" and threatens what little Biden-era regulation around AI exists. It promises no regulation around AI at the federal level and opens the door for massive data center construction and regulating energy consumption related to data center use. Data centers are crucial to AI, which requires a glut of data and processing power to function. This is all great news to tech oligarchs like Mark Zuckerberg and Sam Altman, who were in the front row at Trump's inauguration speech.

Less attention has been given to Department of Education head Linda McMahon's statement on AI in education, released on July 23 in conjunction with the Trump AI Action Plan. It uses standard language framing AI as a necessary good and says that training and responsible AI use are necessary, without detailing what that means, while it ostensibly seeks to extend the same battles against "woke AI".

This statement and the AI Action Plan do not necessarily complicate matters for organizing around AI in higher ed, but they could. Among policymakers, boards of trustees, and university administrators, there is a dearth of framing that runs counter to this standard line of AI as an unquestionable good. The AAUP has signed on to the People's AI Action Plan to provide, and begin organizing around, a narrative that people, not oligarchs, should control technology. We will continue organizing toward this end.

The fury over AI-generated fashion models

I have to say, it’s nice and affirming to see that, even two-plus years into the AI boom, there’s still a strong reaction to efforts to replace human work and art with AI. This week’s exhibit A: