We're about to find out if Silicon Valley owns Gavin Newsom

A guide to the AI and tech bills that have passed the California legislature and now await the governor's signature, or his veto.

Hello friends, fam, and luddites -

Speaking of luddites, I was completely and pleasantly surprised to see the last post blow up. It turns out there is a lot of interest in, and support for, a Luddite Renaissance, as the organizers of one event described it: for organized protest of big tech, for refusing its toxic products, and for resisting the dominion of Silicon Valley’s AI. That story has officially led more machine breakers (in spirit and/or in practice) to sign up for this newsletter than any other post besides the launch post. Thanks to everyone who spread the word, and to Rebecca Solnit, who shared the post with her very activated audience. For the record, I’m always happy to use this space to share news of any and all grassroots tech-critical and Luddite events, movements, and projects, so send them my way.

Today, we dig into the spate of California bills that could help rein in some of the AI industry’s worst impulses—if they make it past Gavin Newsom’s veto pen. California is such a major economic force that any laws passed here have implications for the entire country, even the world. They help set standards adopted elsewhere, and companies that adjust their products to meet California legal requirements often do so in other markets as well.

As always, I can only do this work thanks to my magnificent paid subscribers. As much as I’d like to be catching up on Alien: Earth, I spent this week reading amended AI bills and talking to organizers, authors, and advocates who are hoping their yearlong toil will be enough to overcome the Silicon Valley lobbying machine and get some AI laws on the books. So, if reporting like this has value to you, please consider upgrading to a paid subscription so I can keep doing it. Thanks again to all who read, support, and share this work. OK, enough of that. Onwards.

We’ve talked a lot in these pages about the Trump administration’s embrace of AI, Silicon Valley’s lobbying efforts to halt AI regulation, and the GOP’s push to ban state-level AI legislation altogether. All of those roads have led us here, to this point: Where the very laws that big tech and its political allies hoped to strangle in the cradle now sit on Gavin Newsom’s desk in Sacramento.

There’s obviously a lot going on right now, and the mainstage national discourse has been rough, to say the least, but let’s not lose sight of what’s happening in California: At the start of 2025’s legislative session, there were some 30 bills designed to rein in AI. Since then, Silicon Valley has waged a well-funded lobbying campaign to kill, gut, delay, and otherwise whittle away at those bills (more on all that in a minute). The legislative session ended on September 13th. Now the handful of surviving bills that passed both the Senate and the Assembly await Newsom’s signature or veto, and he has until October 12th to decide.

These bills are hardly radical. Most are straightforward, common-sense measures that none but the most diehard libertarians would take issue with. These are laws that, for example, would ensure that an AI system cannot be used on its own to discipline or fire workers[1] (the No Robo Bosses Act, SB 7), that would ban algorithmic price-fixing schemes (AB 325) that have led to rent increases and the gouging of consumers, and that would require AI companies to submit safety data to the state and not retaliate against whistleblowers.

There’s AB 1340, which technically allows rideshare drivers to form unions and requires Uber and Lyft to share job data with the state, but also slashes the amount of insurance coverage the companies need to offer and is structured to pave the way for company unions of limited use to workers. The Leading Ethical AI Development for Kids Act (AB 1064, or LEAD), meanwhile, would ban AI chatbots marketed to kids (good!) and may be the last bill standing that Silicon Valley is legitimately afraid of.

Now, don’t get me wrong, Silicon Valley wants precisely *none* of these bills to pass. But its army of lobbyists has succeeded in defanging many of them, while helping to stall out others, including a good one limiting the ways AI can enable worker surveillance, and another ensuring driverless delivery vehicles have human oversight. Those have been marked as “two-year” bills, meaning that they’ll be taken up again next year.

This is a crucial moment. If even the barest-bones laws can’t pass here right now, it will come down to one reason above all: Gavin Newsom is currently preparing to run for president and he doesn’t want to upset Silicon Valley and its deep-pocketed donors and platform operators. It will show us that, even in supposedly liberal California, Silicon Valley’s iron grip has become nearly unbreakable, and offer a grim omen for future hopes of subjecting Big Tech to anything resembling democracy.

No Robo Bosses Act (SB 7)

This is a pretty simple and straightforward bill. It stipulates that an employer cannot rely solely on an automated decision system (ADS), like AI software, to fire or discipline workers. And yet, Silicon Valley fought it tooth and nail.

“An employer shall not rely solely on an ADS when making a discipline, termination, or deactivation decision,” the bill’s text states as passed, and it adds that if an ADS is used in a workplace, a human reviewer must be brought into the loop. Employers using an ADS or AI to discipline workers must keep 12 months of that data on the books, and those workers can request it. The law is an effort to curb the rising power and popularity of the algorithmic management systems that companies have embraced. Earlier versions went further, including safeguards against discrimination, but these were stripped out after industry outcry.

The bill is one of three that the California Labor Federation backed this year; another, the surveillance proposal, has been turned into a two-year bill. I reached out to the federation’s president, Lorena Gonzalez, for comment.

“It’s a simple question of: Should there be guardrails?” Gonzalez told me. “Look, if you get disciplined, if you get fired, there should be some oversight. It shouldn’t just come from a computer or an app. Bosses should have souls. We shouldn’t be run by computers.” She sighs before adding, “The bar is pretty low for what we’re asking for.”

The Preventing Algorithmic Price Fixing Act (AB 325)

Another rather obviously sensible law. Hotels, landlords, grocery stores, and rideshare companies increasingly use algorithms that calculate the highest rate a consumer is likely to pay, and many rely on pricing algorithms run by third-party companies that also serve their competitors in the same market. The result is widely understood to be higher rents and higher prices for goods for all of us. In a word: price gouging.

CalMatters has the rundown on AB 325, which seeks to protect consumers from digital tools that facilitate modern-day price gouging:

Landlords, grocery stores, and tech platforms like Amazon, Airbnb and Instacart can use algorithms to rip you off in a variety of ways. To prevent businesses from charging customers higher costs and make life more affordable, Assembly Bill 325 would prohibit tech platforms from requiring independent businesses to use their pricing recommendations.

TechEquity, the tech labor advocacy group and one of the bill’s backers, explains further:

AB 325 updates California’s antitrust laws to address modern digital tools used for illegal price fixing by making it clear that using digital pricing algorithms to coordinate prices among competitors is just as illegal as traditional price fixing. It also closes court-created loopholes for price-fixing algorithms by making it easier to bring good cases against illegal price fixing.

In essence, the law would impose a ban on “using third-party algorithms to secretly coordinate prices.” Unsurprisingly, landlords, hotels, and the Chamber of Commerce oppose the bill, insisting it would drive up costs.

The Leading Ethical AI Development for Kids Act (AB 1064)

Assemblymember Rebecca Bauer-Kahan’s LEAD Act has caught fire as a response to a growing number of tragic cases, like Adam Raine’s, in which chatbot products developed by OpenAI and Character.AI have encouraged children to self-harm or even kill themselves.

The bill, which passed both the CA Senate and the Assembly with bipartisan support, would ban any company or entity with over 1 million users “from making a companion chatbot available to a child unless the companion chatbot is not foreseeably capable of doing certain things that could harm a child, including encouraging the child to engage in self-harm, suicidal ideation, violence, consumption of drugs or alcohol, or disordered eating.”

Pretty reasonable, right? If you’re going to make a chatbot product available to children, you have to ensure that it will not encourage those children to hurt themselves.

Predictably, tech companies, AI firms, and Silicon Valley advocacy groups are absolutely *up in arms* about the bill. They launched an ad campaign, hired new lobbyists just to thwart this single bill, and alleged that trying to ensure the chatbots tech companies market to children don’t instruct them to kill themselves is an affront to innovation. Adam Kovacevich, the head of the Chamber of Progress, a Silicon Valley interest group, wrote an op-ed in the San Diego Union-Tribune arguing, with a straight face, that this bill must be stopped because it stands to take away AI chatbots from teens who need them.

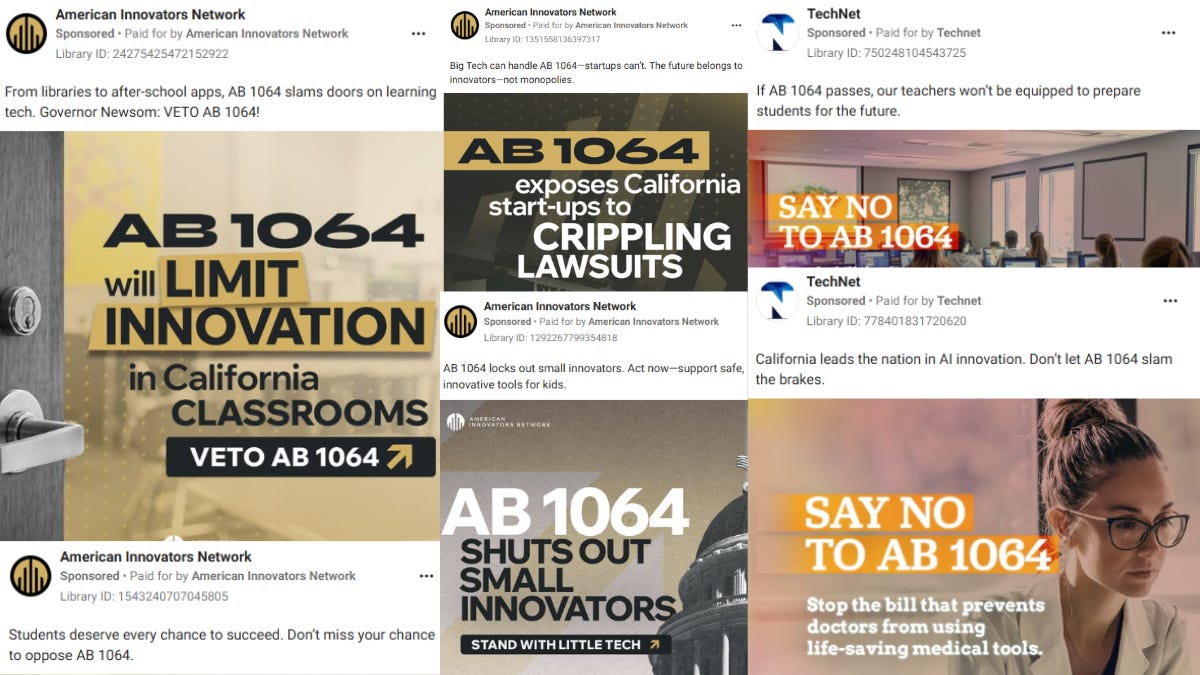

There’s a legitimately disgusting ad campaign out there from a front group that calls itself the “American Innovators Network,” which is in truth a lobbying outfit funded by Silicon Valley mainstays like Andreessen Horowitz and Y Combinator. Its ads insist that the law would “limit innovation in California’s classrooms” and hospitals and take away children’s futures.

“Students deserve every chance to succeed,” one Facebook ad gravely intones.

Once again, this is a bill that asks that tech companies that sell products to children ensure those products are not serving them content that encourages them to harm themselves.

“What the bill does is it basically requires that if we’re going to release companion chatbots to children, [that] they are safe by design, that they do not do the most harmful things,” Bauer-Kahan told Tech Policy Press.

That is apparently too much to ask of the AI companies, which actually makes sense. The AI companies know that, given the current limitations of large language models, they can’t easily guarantee that their chatbot products won’t keep generating noxious content that contributes to the psychosis and mental deterioration of children, at least not without expensive investments in content moderation or adjustments that might dampen user engagement. They just think they should be allowed to keep marketing those products to kids anyway.

This is going to be an interesting one to watch, because there’s another element in the mix. It’s not just that there is genuine and deserved moral outrage over the content chatbots are serving to children, which puts real pressure on Newsom, who would be forced to explain why he passed up the opportunity to do something about an AI-abetted mental health crisis. (There is also a competing nothingburger of a bill, SB 243, that Newsom could sign instead; it claims to address the issue but is content to “label” AI content rather than keep it away from kids it could harm. You can tell it’s a nothingburger because Valley hacks like Kovacevich are embracing it.)

BUT. It appears that LEAD is supported by someone who is perhaps as influential with Gavin as any Valley lobbyist: Jennifer Siebel Newsom, the first partner of California. “Regulation is essential, otherwise we’re going to lose more kids,” she recently said, according to the Sacramento Bee. “I can’t imagine being one of these tech titans and looking at myself in the mirror and being OK with myself.”

The AI Safety Bill 2.0

Last year, Gavin Newsom controversially vetoed a bill authored by Scott Wiener, SB 1047, that was designed to limit catastrophic AI risk. Big AI companies hated the bill because it required a degree of transparency and would have cost them a not-insignificant amount of time and money to comply with. This year, Wiener is back with SB 53, an extremely pared-down version that he developed in concert with the industry and the governor, and that requires AI companies to share “safety protocols” with the state and provide a way to report safety incidents.

This is all a little bit of a joke, if I’m being honest. The previous bill was at least a little interesting because it required AI companies to share data about their training models, which is, of course, why they were furious about it.

The new one just asks AI companies to file a progress report that basically says “here is how we are being careful” and puts in place a loose and rather unenforceable promise to tell the state about anything that goes wrong. No wonder the AI company Anthropic has endorsed it, and OpenAI hasn’t bothered to say much of anything about it except that it would prefer federal regulation. (Anthropic likely figures that it can score some points by trying to bolster its bona fides as the more ethical AI company, while OpenAI probably finds the fact that it has to dedicate a researcher or two to these safety reports kind of annoying, but nothing worth publicly opposing too much.)

Newsom has indicated that he’ll sign it. The bill does formalize protections for whistleblowers at AI labs who report safety incidents, which, fine. Good. The best thing it does is lay the groundwork for CalCompute, a public compute cluster meant to support public and nonprofit AI work, but we’ll see what actually comes of that.

Legalizing gig worker unions

In theory, making it legal for gig workers to form unions should be a great thing. But with AB 1340, the devil is in the details. The bill is nominally an effort to claw back some of the rights that were signed away when Prop 22 was voted into law, classifying gig workers as independent contractors rather than employees, no matter how many hours they worked.

The big problem with AB 1340, along with the fact that it reduces Lyft’s and Uber’s insurance burden, is that it limits which union the workers can choose, using language that restricts that choice to “experienced” unions. That cuts out groups like Rideshare Drivers United, which has spent years organizing drivers on the ground, just without formal union status.

“We don’t think the bill is strong enough actually,” Nicole Moore, the head of RDU, tells me, “and it doesn’t give drivers the chance to pick the organization of their choice. But we have to get out of this dungeon created by Prop 22 and the lawlessness of Lyft and Uber. But it’s legislation and they can make it stronger next round.”

The number one thing that Moore says is good about the new law is that it forces Lyft and Uber to share drivers’ ride data with the state, so it can confirm or deny that the companies are being honest about pay, hours, and standards.

“We’re in the cross hairs of algorithmic pricing,” Moore says, “that’s how they pay us, and they are looking for our lowest price point. We’re making less than federal minimum wage. And AI is responsible for how much we’re paid, how many gigs we get, and terminates us as well. It’s—literally—inhumane. Time to put the robots and algorithms and the tech oligarchs behind them in check.”

Other AI bills

There are some other bills on Newsom’s desk, like AB 316, the Artificial Intelligence: Defenses act, which would bar a defendant who developed, modified, or used an AI system from arguing that the AI autonomously caused the harm in question. That also seems like a no-brainer, and a guard against the way some managers like to use AI and automated systems as accountability sinks.

But the bills outlined above are the big ones. One way I can see this playing out is Newsom making a big deal about signing Wiener’s bill, the “catastrophic risk” bill that is so friendly to industry that Anthropic actively supports it, and using that as cover for vetoing the stronger bills. He might then sign SB 243, the toothless and largely performative alternative to the LEAD Act. (LEAD is the bill that would actually force AI companies to make serious design choices and technical adjustments to protect kids from their products.)

If Newsom does this, signing SB 53 (the “risk” bill) and SB 243 (the bill that pretends to address AI products for children) while spiking the rest, it’s a bad sign.

“At some point, he has to step up and figure out what he’s willing to do to protect workers, jobs, safety, and privacy. And really, the jobs,” the California Labor Federation president Lorena Gonzalez says. “I think we’ll have a lot of stuff next year, and he’s really going to be tested.”

“Do we need to think about tech’s impact on society/community/people/workers?” Rideshare Drivers United organizer and Uber driver Nicole Moore says. “Yes. And we need to rein these mfs in. We’re letting them get way ahead of us. And not for innovation’s sake but profit and greed.”

Whatever happens, Gonzalez notes that as she’s out canvassing and talking with voters, she’s seeing support for laws reining in tech only grow.

“There’s actually an increase in appetite,” she says. “If you talk to voters, voters get it far more than legislators do. When you talk in particular to working class workers—I always say it’s a little ironic because the AI space and the tech space is already affecting white collar workers quicker, and sooner—but blue collar workers are much more likely to understand the threat that’s coming, and to want guardrails and protections. You can have innovation, you can have advances in technology, and still have guardrails, safety, privacy, and protections for their jobs. The more people see this bleeding into their workplaces, our momentum will only grow.”

She pauses, then adds, “It’s coming. We’re going to have this crisis, and elected officials are going to have to decide, are they representing their constituents—or are they representing big tech?”

Alright! Californians, and everyone else, be sure to do all you can to shout about these AI bills in the next two weeks. Some of the bills do move the needle in important ways, and while some are absolutely too friendly to Silicon Valley, we can read these bills as a sort of barometer of what’s politically possible in our era of deepening tech oligarchy. If Newsom decides that there’s a political cost to letting big tech run wild and unaccountably dictate Californians’ digital lives, well, that’s a start.

A quick shout-out to the fantastic work CalMatters has been doing covering the progress of state bills, and to Khari Johnson, who’s been on the tech law beat. Check out their bill tracker to follow along, or this rundown of top bills, both tech-related and otherwise. Also, stay tuned for something a little bit different this weekend: I may try to run a Blood in the Machine podcast episode with the author of a great book on why we all really fear AI. We had a great chat, and if I can figure out the tech and distribution, I’ll push it out ASAP. As always, thanks everyone, and hammers up.

[1] Yes, like the infamous IBM slide.
