Lost in the slop layer

How AI has encrusted our culture and social sphere in a sedimentary layer of slop.

One of the more perplexing things about the AI bubble is how relatively little we have to show for it. Mostly, it’s chatbots, some coding automation, and slop. A lot of slop.

You can spend hours reading through eye-popping Nvidia earnings reports and lengthy columns expounding on the transformative powers of the technology and analysts’ takes on what may be the biggest bubble of our generation. That’s to say nothing of the breathless proclamations of tech executives, of course, or the federal government’s own enthusiastic overtures. But then you flip on Saturday Night Live and the first sketch they run after the monologue reminds you that a lot of the general public’s experience of AI is actually more like the one depicted here:

That is, as slop. Encountering awful AI slop (and startups with skin-crawling pitches like ‘what if we use AI to reanimate dead family members’) is an experience universal enough that Glen Powell acting out Sora-esque output gets top billing at SNL. Slop has broken through; slop is everywhere; slop is mainstream.

And it’s not just that everyone, regardless of demographic, has watched a discomfiting AI-generated video or heard about a bad AI startup. AI slop is on TV ads, it’s in video games, it’s on the pop charts. There is a tangible, tactile, and unavoidable slop layer that has encrusted pop culture and much of modern life.

If the slop explosion began in earnest in 2023, with the unleashing of ChatGPT and Midjourney, and it reached critical mass in 2024, then now, in 2025, we’re seeing that slop hardening into the outer shells of our cultural institutions and social spheres, like a layer of so much automata-inflected sedimentary rock. And I’m not one to pass up a metaphor, so let’s go all in why don’t we. Remember middle school geology? The US Geological Survey notes that sedimentary rock is “formed from pre-existing rocks or,” like slop, “pieces of once-living organisms.” The USGS also helpfully notes that sedimentary rocks have “distinctive layering or bedding.” You know it when you see it.

Now, in 2025, whenever we go to read a blog, flip on the TV, stream music, or pick up a PS5 controller, we are likely to encounter the slop layer. It is an agglomeration of content living and dead, marked by a faintly unpleasant aesthetic homogeneity, that evokes in us a weary inability to discern what’s real and what’s not. We wade through this tide of automated mediocrities and the ethical breaches they represent and consider the credibility of its component parts. Whether we want to or not, and mostly we do not, we must engage the slop layer.

A quick word before we go on: Instead of the usual entreaty to subscribe or to become a paid subscriber if you enjoy or find value from this work—which will continue to constitute oh 97% of my livelihood, and please do that if possible—I’m trying something new. That is, this is a sponsored post, from a good company offering a good service.

As you read this, or rather, immediately before you read this, when you were on Google or Facebook, browsing or shopping etc online, a host of mostly invisible companies were compiling data profiles of you. Today’s edition of BITM is sponsored by DeleteMe, whose raison d’etre is hunting down and, yes, deleting the data that brokers have hoovered up about you over the years and made available to their clients. DeleteMe is Wirecutter’s top-rated data removal service, and I’ve used it myself, to locate and eradicate 100 or so sites that were listing and selling personal info like my home address and phone number. If you’re interested in taking DeleteMe for a spin, sign up here and use the code LUDDITES for 20% off an annual subscription. OK! Onwards we go.

The slop economy has been going full steam for a year or two now. In 2023, 404 Media’s Jason Koebler wrote in a report about the rise of AI content on Facebook that the “once-prophesized future where cheap, AI-generated trash content floods out the hard work of real humans is already here.” In 2024, Max Read declared that the slop era had arrived. Pumped out primarily by content farmers, web marketing opportunists and AI boosters, the flood of slop was, for a while, largely contained within social media platforms, email spam filters, and clickbait websites. Now, those walls are being breached.

OpenAI’s launch of the AI video generator Sora 2 made slop easier than ever to produce; major copyright holders sounded the alarm. Taylor Lorenz called it the AI Slop-pocalypse.

Then, last week, an AI-generated country music song by “Breaking Rust” notched the top spot on a(n admittedly obscure) Billboard chart (Digital Country Music Song Sales), and has racked up millions upon millions of streams on Spotify.

It’s a generic, pretty terrible song that’s nonetheless not too out of step with the performatively masculine/hip hop-inflected/uncomfortably Imagine Dragons-adjacent trendlines in modern country pop. Once you hear it as slop, it’s impossible to unhear it as such. The top-voted comments on YouTube seem to agree:

The song gained popularity on Spotify, where it seems to have been recommended by the algorithm in Discover Weekly mixes, and where it was likely nodded along to in anonymity by millions who didn’t know or care who was behind the song. (This being a reminder that platform consolidation, and the social media networks and streaming services that want for cheap, voluminous content, produced the ideal conditions for generating the slop layer.) So it’s not like the song is an actual hit, it just blends unnervingly into the background, condensing the work of unnamed human recording artists into inoffensive, key-appropriate pap. It’s part of the slop layer.

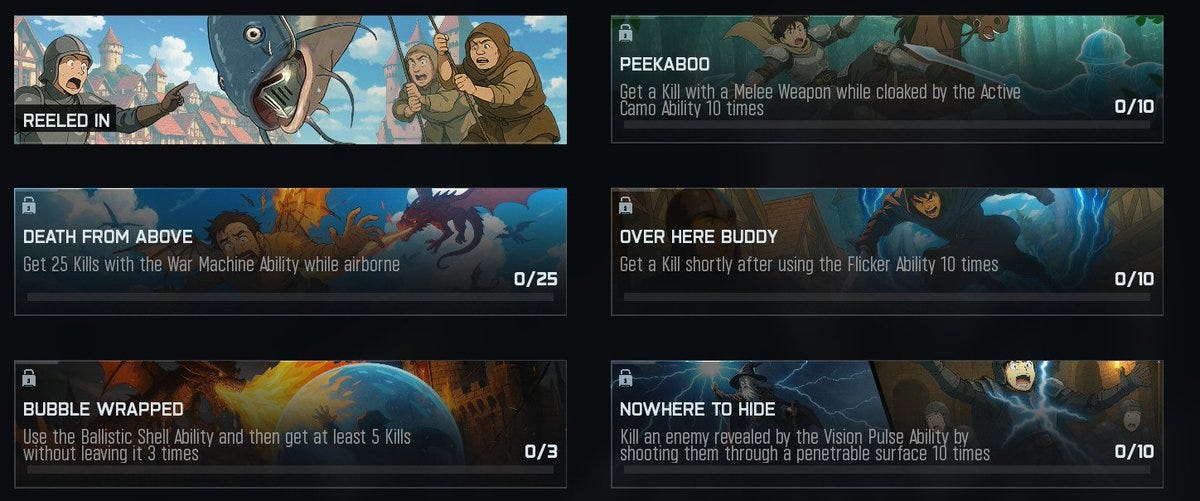

The same week that Breaking Rust was racking up Spotify plays, gamers were discovering that the newest installment of the blockbuster video game franchise Call of Duty was loaded with AI-generated art. The games blog FRVR explains (and also cites my 2024 WIRED piece on AI in the gaming industry, thanks FRVR):

Call of Duty has already been criticized for its reliance on AI-generated artwork in the past. 2023’s Modern Warfare 3 included an AI-generated calling card in its $15 cosmetic Yokai Wrath bundle, and Black Ops 6—last year’s entry—was lambasted for its use of a generated image of a zombie Santa, which infamously had six fingers instead of five.

This year, the shame gloves are off, and Activision has pushed customers face-first into the up-chuck of machine-generated visuals. There is an extreme amount of AI artwork used within the base version of the game, despite the fact that last year’s Black Ops 6 made over $1 billion in its first 10 days on sale—the highest-grossing CoD game ever.

One interesting thing about the slop in the Call of Duty game is that it *doesn’t even bother to disguise itself as slop*. Some of it’s made in the Ghiblified style that is all but shorthand for AI-generated slop, as this gamer flagged:

A particularly exposed chunk of the slop layer right there. Meanwhile, the hit new game Arc Raiders used generative AI instead of voice actors, causing an outrage of its own.

Speaking of outrage, artists and Disney fans were taken aback when CEO Bob Iger announced an incoming content deal that will allow Disney+ subscribers to mass produce their own Disneyified slop. Here’s the Hollywood Reporter:

“The other thing that we’re really excited about, that AI is going to give us the ability to do, is to provide users of Disney+ with a much more engaged experience, including the ability for them to create user-generated content and to consume user generated content — mostly short-form — from others,” Iger continued.

Nestled in that comment is a CEO’s dream: users paying corporations for the privilege of voluntarily producing cheap content that obviates the need to hire human artists to create more original works. The trade-off is of course that the vast majority of the new Disney UGC will be pure slop, but so it goes.

Then there’s this AI-generated Coke ad, which began airing last week, too, and which the Verge’s Jess Weatherbed accurately described as a “sloppy eyesore.”

As Weatherbed noted, this is not Coca-Cola’s first rodeo in the slop arena, as it stirred controversy by making a bad AI-generated ad last year, too. And yet, these blunders are seemingly worth the risk for Coca-Cola, with the company’s Chief Marketing Officer, Manolo Arroyo, telling The Wall Street Journal that its latest holiday campaign was cheaper and speedier to produce compared to traditional production. “Before, when we were doing the shooting and all the standard processes for a project, we would start a year in advance,” Arroyo told the publication. “Now, you can get it done in around a month.”

And therein we have the common denominator between all this slop, and I regret to inform you it’s not particularly revelatory: It’s cheap. It’s cheap as dirt to turn on the slop spigot to try to juice post views on Facebook or X or yes Substack, or to crank out a shitty robot country song. Every piece of Ghiblified video game art from a triple-A studio or AI-generated article on a media outlet’s website is the visual representation of slashed labor costs, the aesthetics of digital deskilling.

This is underlined by a study that found that if one trains a large language model exclusively on the output of professional writers, that model will be able to emulate the works of famous authors as well as said writers. The paper concludes that “the median fine-tuning and inference cost of $81 per author represents a dramatic 99.7% reduction compared to typical professional writer compensation.”

The slop layer would be offensive enough if it were limited to creative labor, but I’m afraid it coats even our social spaces now. What are chatbots but the automation of human interaction, or slop friends? Many people are now even channelling their sexual impulses through a particularly toxic brand of slop, as evidenced by the enormous and horrifying leak of user data from an erotic chatbot service that let its customers create deepfakes of celebrities, crushes, even their teachers. Others are being asked, a la Glen Powell, to render AI-generated avatars of loved ones; slop memories.

The Atlantic writer Charlie Warzel recently described AI as “a mass delusion event,” noting the degree to which the technology has driven us to question more frequently whether something is real and what purpose it serves. And while it’s true that generative AI has been the purveyor of a great many delusions, many of them tragic, the broader and shallower effect may be a great cheapening. The slop layer is enshittification made total and constant.

It’s playing a Call of Duty sequel and staring at obviously AI art on a load screen, while your ChatGPT companion flatters you on an app on your phone and the statistical mean of a new country song autoplays on the speakers. It’s the replicated cultural artifacts, social interactions, and sexual fantasies all lathered onto reality, embedded with the telltale sediments of their plagiarism and automation.

This raises yet another question that feeds into the interminable AI bubble discourse: What if everyone gets sick of squirming? What if we cross a threshold where living in a perpetual, ambient uncanny valley produces a sustained backlash? It may even eventually become too obvious that the most ubiquitous export of the AI boom is a pervasive layer of slop that’s made everything look and feel worse, a feeling that could speed a downturn should investors’ bullish sentiment finally crack. But there’s a chance it doesn’t, and that is of course the fear. In the event that it does not, and this is the harder question, we’re all faced with asking how we might navigate, or confront, the slop layer that’s growing and hardening around us. Do we tolerate its creeping repugnance? Do we accept it as the latest encroachment of technological capitalism? Or do we reject it?

To that end, a couple notes and ideas for fighting/excavating the slop layer.

Fighting the slop layer

Deana Igelsrud, the legislative & political advocate for the Concept Art Association, passes along word that a crucial hearing will be held for AB-412, proposed California legislation that would force AI companies to alert copyright holders when their works are used to train large language models, and give them the chance to opt out and a mechanism for obtaining compensation.

On December 8, 2025, the Assembly Privacy and Consumer Protection Committee and the Senate Judiciary Committee will be hosting a joint informational hearing on the topic of AI and Copyright at Stanford University. I’ll follow up on this email with an agenda as soon as it is finalized – in the meantime, some basic information is included below:

WHERE: Stanford University - Paul Brest Hall, 555 Salvatierra Walk, Stanford, CA 94305.

WHEN: Monday, December 8th at 10 AM.

WHO: Artists, creatives, and copyright owners who want to make their voices heard.

Over the course of this hearing we will learn about AI and copyright from a variety of artists, academics, technical and legal experts, policymakers, and representatives from the tech industry. At the end of the hearing there will be an opportunity for members of the public to speak for 1-2 minutes each. If you’re passionate about these issues, we’d love to see you there. No registration is required ahead of the event.

Excavating the slop layer

Meanwhile, Mike Pepi has an intriguing plan for excavating the slop layer, or at least helping to preserve the livelihoods of artists and creative workers it’s beginning to bury: A slop tax. I love it, check out the proposal here:

OK, that’s it for today. Thanks for reading everyone. Hammers up.

Great article and cathartic to read as always! I encountered the slop layer today when planning a road trip. Most top search results for “things to do in the Ozarks” are listicles from 2025 that unmistakably read like ChatGPT. One was titled “Top 25” but only had 5 things. Eventually I remembered my usual trick of setting the search time period to “everything before 12/1/23,” and as usual, that’s when I found the good stuff.

On the Breaking Rust thing, one of the big record labels (UMG) recently reached a settlement with one of the AI-songwriting slop machines (Udio) whereby people who make slop music will have to keep it on the Udio platform (instead of being able to export it to streaming platforms like Spotify). IF this system works (a big "if"), and IF the other record labels and other slop machines reach similar settlements (another big "if"), it would help contain music slop within its own slopiverse. I just wrote about this here https://egghutt.substack.com/p/ai-musicians-are-starting-to-replace if you're interested. It's just amazing how many battles have to be fought on so many fronts. Hammers up indeed.