AI Killed My Job: Tech workers

Tech workers at TikTok, Google, and across the industry share stories about how AI is changing, ruining, or replacing their jobs.

“What will AI mean for jobs?” may be the single most-asked question about the technology category that dominates Silicon Valley, pop culture, and our politics. Fears that AI will put us out of work routinely top opinion polls. Bosses are citing AI as the reason they’re slashing human staff. Firms like Duolingo and Klarna have laid off workers in loudly touted shifts to AI, and DOGE used its “AI-first” strategy to justify firing federal workers.

Meanwhile, tech executives are pouring fuel on the flames. Dario Amodei, the CEO of Anthropic, claims that AI products like his will soon eliminate half of entry-level white-collar jobs, and replace up to 20% of all jobs, period. OpenAI’s Sam Altman says that AI systems can replace entry-level workers, and will soon be able to code “like an experienced software engineer.” Elsewhere, he’s been blunter, claiming, “Jobs are definitely going to go away, full stop.”

But the question remains: What’s actually happening on the ground, right now? There’s no doubt that lots of firms are investing heavily in AI and trying to use it to improve productivity and cut labor costs. And it’s clear that in certain industries, especially creative ones, the rise of cheap AI-generated content is hitting workers hard. Yet broader economic data on AI impacts suggests a more limited disruption. Two and a half years after the rise of ChatGPT, after a torrent of promises, CEO talk, and think pieces, how is—or isn’t—AI really reshaping work?

About a month ago, I put out a call in hopes of finding some answers. I had a vague idea for a project I’d call AI Killed My Job, that would seek to examine the many ways that management has used AI to impact, transform, degrade, or, yes, try to replace workers outright. It’s premised on the notion that we’ve heard lots of speculation and plenty of sales pitches for AI automation—but we have not heard nearly enough from the workers experiencing the phenomenon themselves.

The title is somewhat tongue-in-cheek; we recognize that AI is not sentient, that it’s management, not AI, that fires people, but also that there are many ways that AI can “kill” a job, by sapping the pleasure one derives from work, draining it of skill and expertise, or otherwise subjecting it to degradation.

So, I wrote a post here on the newsletter explaining the idea, posted a call to social media, and asked for testimonials on various news shows and podcasts. I was floored by the response. The stories came rolling in. I heard from lots of folks I expected to—artists, illustrators, copywriters, translators—and many I didn’t—senior engineers, managers of marketing departments, construction industry consultants. And just about everyone in between. I got so many responses, and so many good ones, that I resolved to structure the project as a series of pieces that center the workers’ voices and testimonies themselves, and share their experiences in their own words.

Because I got so many accounts, I decided to break down the articles by field and background. Starting, today, with an industry that’s at once the source of the automating technology and feeling some of its most immediate impacts. Today, we’ll begin by looking at how AI is killing jobs in the tech industry.

I heard from workers who recounted how managers used AI to justify laying them off, to speed up their work, and to make them take over the workload of recently terminated peers. I heard from workers at the biggest tech giants and the smallest startups—from workers at Google, TikTok, Adobe, Dropbox, and CrowdStrike, to those at startups with just a handful of employees. I heard stories of scheming corporate climbers using AI to consolidate power inside the organization. I heard tales of AI being openly scorned in company forums by revolting workers. And yes, I heard lots of sad stories of workers getting let go so management could make room for AI. I received a message from one worker who wrote to say they were concerned for their job—and a follow-up note just weeks later to say that they’d lost it.

Of the scores of responses I received, I’ve selected 15 that represent these trends; some are short and offer a snapshot of various AI impacts or a quick look at the future of employment. Others are longer accounts with many insights into what it means to work in tech in the time of AI—and what it might mean to work, period. The humor, grace, and candor in many of these testimonials often amazed me. I cannot thank those who wrote them enough. Some of these workers took great risks to share their stories at a time when it is, in tech, a legitimate threat to one’s job to speak up about AI. For this reason, I’ve agreed to keep these testimonies anonymous, to protect the identities of the workers who shared them.

Generative AI is the most hyped, most well-capitalized technology of our generation, and its key promise, that it will automate jobs, desperately needs to be examined. This is the start of that examination.

Three very quick notes before we move on. First, this newsletter, and projects like AI Killed My Job, require a lot of work to produce. If you find this valuable, please consider becoming a paid subscriber. With enough support, I can expand such projects with human editors, researchers, and even artists—like Koren Shadmi, who I was able to pay a small fee for the 100% human-generated art above, thanks to subscribers like you. Second, if your job has been impacted by AI, and you would like to share your story as part of this project, please do so at AIkilledmyjob@pm.me. I would love to hear your account and will keep your account confidential as I would any source. Third, some news: I'm partnering with the good folks at More Perfect Union to produce a video edition of AI Killed My Job. If you're interested in participating, or are willing to sit for an on camera interview to discuss how AI has impacted your livelihood, please reach out. Thanks for reading, human, and an extra thanks to all those whose support makes this work possible. Tech is just the first industry I plan on covering; I have countless more stories in fields from law to media to customer service to art to share. Stay tuned, and onwards.

This post was edited by Mike Pearl.

“AI Generated Trainers”

Content moderator at TikTok.

I have a story. I worked for TikTok as a content moderator from August 2022 to April 2024, and though I was not replaced by AI, I couldn't help noticing that some of the trainers were.

In my first year, I would be assigned training videos that featured real people either reading or acting. These trainings would be viewed internally only, not available to the public. Topics could be things like learning about biases, avoiding workplace harassment, policy refreshers, and so on. In the early months of my time there, the trainings were usually recorded slideshows with humans reading and elaborating on the topics. Sometimes they were videos that included people acting out scenarios. Over time, the human trainers were replaced with AI by way of generated voices or even generated people going over the materials in the videos.

It was honestly scary to me. I don't know how to explain it. I remember that they had added embellishments to make them seem more human. I distinctly remember a woman with an obscure black tattoo on her bicep. The speech and movement weren't as clean as what I see in videos now, but they were close enough to leave me with an eerie sensation.

As far as content moderation goes, much of that is already done by AI across all major social media platforms. There has historically been a need for human moderators to differentiate grey areas that technology doesn't understand. (Example: someone being very aggressive in a video and using profanity, but it not being directed at an individual. AI might think the video involves bullying another user and ban the video, but a moderator can review it and see that there's no problem/no targeted individual.)

I think as AI models continue to learn, however, moderators will be replaced completely. That's just a theory, but I'm already seeing the number of these job postings dwindling and hearing murmurs from former coworkers on LinkedIn about widespread layoffs.

“AI is killing the software engineer discipline”

Software engineer at Google.

I have been a software engineer at Google for several years. With the recent introduction of generative AI-based coding assistance tools, we are already seeing a decline in open source code quality [1] (defined as "code churn" - how often a piece of code is written only to be deleted or fixed within a short time). I am also starting to see a downward trend in (a) new engineers' readiness to do the work, (b) engineers' willingness to learn new things, and (c) engineers' effort to put serious thought into their work.

Specifically, I have recently observed first hand some of my colleagues at the start of their career heavily relying on AI-based coding assistance tools. Their "code writing" consists of iteratively and alternatingly hitting the Tab key (to accept AI-generated code) and watching for warning underlines [2] indicating there could be an error (which have been typically based on static analysis, but recently increasingly including AI-generated warnings). These young engineers - squandering their opportunities to learn how things actually work - would briefly glance at the AI-generated code and/or explanation messages and continue producing more code when "it looks okay."

I also saw experienced engineers in senior positions who, when faced with an important data modeling task, decided to generate the database schema with generative AI. I originally thought it was merely a joke but recently found out that they basically just used the generated schema in actual (internal) services essentially without modification, even though there were some obvious, glaring issues. Now those issues have propagated to other code that needs to interact with that database and it will be more costly to fix, so chances are people will just carry on, pretending everything is working as intended.

All of these will result in poorer software quality. "Anyone can write code" sounds good on paper, but when bad code is massively produced, it hurts everyone including those who did not ask for it and have been trusting the software industry.

“How AI eliminated my job at Dropbox”

Former staff engineer at Dropbox.

I was part of the 20% RIF at Dropbox at the end of October. [3] The stated reason for this was to focus on Dash, their AI big-bet. The 10% RIF in 2023 was also to focus more on Dash.

How did this eliminate my job? Internal reprioritization, that's how. I was moving into an area that was scheduled to focus on improving Dropbox's reliability stance in 2025 and beyond, intended to be a whole year initiative. It's tricky to go into details, but the aim was to take a holistic view of disaster preparedness beyond the long standing disaster scenarios we had been using and were already well prepared for. Projects like this are common in well established product-lines like Dropbox's file-sync offering, as they take a comprehensive overview of both audit compliance (common criteria change every year) and market expectations.

This initiative was canned as part of the RIF, and the staffing allocated to it largely let go. Such a move is consistent with prioritizing Dash, a brand new product that does not have dominant market-share. Startups rarely prioritize availability to the extent Dropbox's file-sync product does because the big business problem faced by a startup is obtaining market-share, not staying available for your customers. As products (and companies) mature, stability starts gaining priority as part of customer retention engineering. Once a product becomes dominant in the sector, stability engineering often is prioritized over feature development. Dropbox file-sync has been at this point for several years.

With Dash being a new product, and company messaging being that Dash is the future of Dropbox, a reliability initiative of the type I was gearing up for was not in line with being a new product scrapping for market-share. Thus, this project and the people assigned to it were let go.

Blood in the Machine: What are you planning next?

This job market is absolutely punishing. I had a .gov job for the .com crash, a publicly funded .edu job for the 2008 crash, and a safe place inside a Dropbox division making money hand over fist during the COVID crash (Dropbox Sign more than doubled document throughput over 2020). This is my first tech winter on the bench, and I'm getting zero traction. 37 job apps in the months I've been looking, 4 got me talking to a human (2 of which were referrals), all bounced me after either the recruiter or technical screens. Never made it to a virtual onsite.

This has to do with me being at the Staff Engineer level, and getting there through non-traditional means. The impact is that when I go through the traditional screens for a high level engineer I flame out, because that wasn't my job. The little feedback I've gotten from my hunt is a mix of 'over-qualified for this position' and 'failed the technical screen.' Attempts to branch out to other positions like Product Manager or Technical Writer have failed due to lack of resume support and everyone hiring Senior titles.

I may be retired now. I'm 50, but my money guy says I've already made retirement-money; any work I do now is to increase lifestyle, build contingency funds, or fund charitable initiatives. The industry is absolutely toxic right now as cost-cutting is dominating everything but the most recently funded startups. We haven't hit an actual recession in stock-prices due to aggressive cost and stock-price engineering everywhere, and cost-engineering typically tanks internal worker satisfaction. I've been on the bench for six months, money isn't a problem. Do I want to stick my head back into the cortisol amplifier?

Not really.

“It's no longer only an issue of higher-ups: colleagues are using chatgpt to undermine each other.”

Tech worker, marketing department.

I used to work at a mid-sized Silicon Valley startup that does hardware. The overall project is super demanding, and reliant on skilled, hands-on work. Our marketing team was tiny but committed. My manager, the CMO [4], was one of the rare ones: deeply experienced and seasoned in the big ones, thoughtful, and someone who genuinely loved his craft.

Last year, a new hire came in to lead another department. Genuinely believe she is a product of the "LinkedIn hustler / thought-leadership / bullshit titles" culture. Super performative.

Recently, during a cross-functional meeting with a lot of people present, she casually referred to a ChatGPT model she was fine-tuning as our "Chief Marketing Officer"—in front of my manager. She claimed it was outperforming us. It wasn’t—it was producing garbage. But the real harm was watching someone who’d given decades to his field get humiliated, not by a machine, but by a colleague weaponizing it.

Today, in the name of “AI efficiency,” a lot of people saw the exit door and my CMO got PIPd. [5]

The irony here is two-fold: one, it does not seem that the people who left were victims of a turn to "vibe coding" and I suspect that the "AI efficiency" was used as an excuse to make us seem innovative even during this crisis. Two, this is a company whose product desperately needs real human care.

“AI killed two of my jobs”

Veteran tech worker at Adobe and in the public sector.

AI killed my previous job, and it's killing my current one.

I used to work at Adobe... Despite constantly telling my team and manager I strongly disliked GenAI and didn't want to be involved in GenAI projects, when AI hype really started picking up, my team was disbanded and I was separated from my teammates to be put on a team specifically working on GenAI. I quit, because I didn't see any way for me to organize, obstruct, or resist without being part of building something that went against my values. I often wonder whether I should have let myself get fired instead. This was before we learned that Adobe trained Firefly on Stock contributions without contributors' opt-in, and before the Terms of Service debacle, so I'm glad I wasn't there for that at least.

Now I work in the public sector. It's better in most ways, but I have to spend ridiculous amounts of time explaining to colleagues and bosses why no, we can't just "use AI" to complete the task at hand. It feels like every week there's a new sales pitch from a company claiming that their AI tool will solve all our problems—companies are desperate to claw back their AI investment, and they're hoping to find easy marks in the public sector.

I don't want to be a curmudgeon! I like tech and I just want to do tech stuff without constantly having to call bullshit on AI nonsense. I'd rather be doing my actual job, and organizing with my colleagues. It's exhausting to deal with credulous magical thinking from decision-makers who should know better.

*My work at Adobe*

When I was at Adobe, I worked in Document Cloud. So like Acrobat, not Photoshop. For most of my time there, my job was evaluating machine learning models to see if they were good enough to put in a product. The vast majority of the time, Document Cloud leadership killed machine learning projects before they ended up in a product. That was either because the quality wasn't good enough, or because of a lack of "go-to-market." In other words, middle and upper management generally did not accept that machine learning is only appropriate for solving a small subset of problems, which need to be rigorously-scoped and well-defined. They were looking for "everything machines" (these are derogatory air quotes, not a direct quote) that would be useful for huge numbers of users.

By the time AI hype really started to pick up, I had moved to a team working on internal tools. I wasn't building or evaluating machine learning models and I was outspoken about not wanting to do that. When LLM hype got really big, senior leadership started describing it as an "existential threat" (that is a direct quote as far as I remember), and re-organizing teams to get LLMs into Document Cloud as soon as possible. Adobe did not do *anything* quickly, so this was a huge change. A big red flag for me was that rather than building our own LLMs, Adobe used OpenAI's chatbots. When I asked about all of OpenAI's ethical and environmental issues, management made generic gestures towards being concerned but never actually said or did anything substantive about it. At that point I quit, because I had specifically been saying I didn't want to be involved in GenAI, and given the rushed and sloppy nature of the rollout, I didn't want my name anywhere near it.

*Colleagues' reactions*

Definitely I knew some colleagues who didn't like what Adobe was doing. There were a lot of people who privately agreed with me but publicly went along with the plan. Generally because they were worried about job security, but also there's a belief at Adobe that the company's approach to AI isn't perfect but it's more ethical than the competition. Despite being a huge company, teams were mostly isolated from each other, and as far as I know there wasn't a Slack channel for talking about AI concerns or anything like that. When I asked critical questions during department meetings or expressed frustration with leadership for ignoring concerns, people told me to go through the chain of command and not to be too confrontational.

Looking back, I wish my goal hadn't been to persuade managers but instead to organize fellow workers. I was probably too timid in my attempts to organize. I do regret that I didn't try having more explicit 1-on-1's about this, even though it would have been risky. Obviously I was very lucky/privileged to have enough savings to even consider quitting or letting myself get fired in this shitty job market, and I often wonder if I could have done more to combine strategies and resources with other colleagues so that fighting back would be less risky for everyone.

*Impact of AI on work*

When the GenAI push started, a lot more of my colleagues started working nights and weekends, which was rare (and even discouraged) before then. Managers paid lip service to Adobe's continuing commitment to work-life balance, but in practice that didn't match up with the sense of urgency and the unrealistic deadlines. I'm not aware of anyone who got fired or laid off specifically because of getting replaced by AI, and it looks like teams are still hiring. Although for what it's worth, in general Adobe does not do layoffs these days, but instead they pressure people into quitting by taking work away from them, putting them on PIPs, that kind of thing.

I found out that a colleague who had been struggling with a simple programming task for over a month—and refusing frequent offers for help—was struggling because they were trying to prompt an LLM for the solution and trying to understand the LLM's irrelevant and poorly-organized output. They could have finished the work in a day or two if they had just asked for help clarifying what they needed to do. I and their other teammates would gladly have provided non-judgmental support if they had asked.

Our team found out that a software vendor (I can't say which one but it was one of the big companies pushing Agentic AI) was using AI to route our service request tickets. As a result, our tickets were being misclassified, which meant that they were failing to resolve high-priority service disruptions that we had reported. We wasted days on this, if not weeks.

*My current job*

At my current job, I'm basically a combination of programmer and database administrator. I like the work way more than what I did at Adobe. Much like the corporate world, there are a lot of middle and upper managers who want to "extract actionable insights" from data, but lack the information literacy and technical knowledge to understand what they can (or should) ask for. And the people below them are often unwilling to push back on unreasonable expectations. It's very frustrating to explain to executives that the marketing pitches they hear about AI are not reflective of reality. It makes us seem like we're afraid of change, or trying to prevent "progress" and "efficiency."

So I would say the private and public sector have this in common: the higher up you go in the organization, the more enthusiastic people are about "AI," and the less they understand about the software, and (not coincidentally) the less they understand what their department actually does. And to the extent that workers are opposed to "AI," they're afraid of organizing, because it feels like executives are looking for reasons to cut staff.

“No crypto, no AI”

Tech worker.

So this is sort of an anecdote in the opposite direction of AI taking jobs—in a recent interview process at a mature startup in the travel tech space, part of the offer negotiations were essentially me stating “yeah I don’t want to work here if you expect me to use or produce LLM-based features or products” (this is relevant as the role is staff data scientist, so ostensibly on supply side of AI tooling), and them responding with “yeah if you want to do LLM work this isn’t the place for you.”

Though my network isn’t extensive, I feel like this is a growing sentiment in the small- and medium-tech space - my primary social media is on a tech-centric instance of the fediverse (hachyderm.io) and more often than not when I see the #GetFediHired hashtag, it’s accompanied by something akin to “no crypto, no AI” (also no Microsoft Teams, but I digress).

“Gradual addition of AI to the workplace”

Computer programmer.

Our department has now brought in Copilot, and we are being encouraged to use it for writing and reviewing code. Obviously we are told that we need to review the AI outputs, but it is starting to kill my enjoyment of my work; I love the creative problem solving aspect of programming, and now the majority of that work is being passed on to AI, with me as the reviewer of the AI's work. This isn't why I joined this career, and it may be why I leave it if it continues to get worse.

“my experience with AI at work and how I just want to make it do what I don't want to do myself”

Software engineer at a large tech firm.

All my life, I’ve wanted to be an artist. Any kind of artist. I still daydream of a future where I spend my time frolicking in my own creativity while my own work brings me uninterrupted prosperity.

Yet this has not come to pass, and despite graduate level art degrees, the only income I can find is the result of a second-class coding job for a wildly capitalist company. It’s forty hours a week of the dullest work imaginable, but it means I have time to indulge in wishful thinking and occasionally, a new guitar.

I am experiencing exactly what you describe. There’s been layoffs recently, and my company is investing heavily in AI, even though they’re not sure yet how best to make it do anything that our corporate overlords imagine it should do.

From the c-level, they push around ideas about how we could code AI to do work, but in reality, those on the ground are only using AI to help write code that does the work, as the code always has. Real use cases where AI can be used to do work that regular old programming could not are so rare that when I discovered one two weeks ago, I asked for a raise in the same breath as the pitch.

And here I am, five hundred words into this little essay, and I’ve barely touched on AI! Nor have I even touched any of the AI tools that are so proudly thrust into my face to produce this. I’ve played around with AI tools for creative writing, and while they’re good at fixing my most embarrassing grammar errors, none of them have helped me in my effort to bridge the gap between my humble talent as a creative and my aspirations for my effort.

There’s a meme going on Pinterest that I believe sums up this moment: “We wanted robots to clean the dishes and do our laundry, so we could draw pictures and write stories. Instead they gave us robots to draw pictures and write stories, so we could clean dishes and do laundry.” This feels very true in the sense that human talent is getting valued not for the time it took to gain it and the ingenuity it proves, but for how well it feeds the greed of those who can afford to invest in bulk. But art in capitalism has always been this way, hasn’t it? If we don’t have a patron, we might as well eat our paint, and AI only tightens that grip that the privileged have held us in for centuries.

I’ve never been so fortunate to consider the work that funds my DoorDash addiction to be my passion’s output, and perhaps that’s why I’m not afraid of what I’ll lose. But it’s that same work that has me sharing notes with fellow programmers, and many of them will say with blunt honesty that they’re worried they’ll be replaced by AI. This is a vulnerability I rarely see from the group of people who often elevated their work as valuable and practical, as opposed to my efforts to learn how to make music and poetry, which were wasteful and useless. But I am like a plant that learned how to grow on rocks and eat insects. In a meeting soon, I’m going to level with them:

Don’t you understand? This work, what we do day in and day out for a soulless organization that drives profit from stealing our essence, this is the laundry! And if they think I’ll just throw that work into a machine and let it do all the work for me, they’re right. But it’s a machine that automates the work of running machines that automates the work that people used to do by hand, while constantly stealing glances at the clock, just waiting for the moment when they could be out from under the gaze of some righteous egomaniac.

Maybe this is just the perspective of someone who’s seen her work, of almost any type, get devalued with such regularity that it’s hard to imagine the robots making it any more difficult than it already is. No one’s ever really cared about my Instagram posts. No one pretends that my code will change the world. Perhaps, someday, I’ll make more money while babysitting on the weekends. I spend a lot of time thinking about things that haven’t worked out for me, and for us, as a society, and I think some of our worst failures come from moments when we can’t differentiate between the ability to use machines and our abilities as machines.

Last week I made a pie for my family, and I obviously didn’t get paid for it. Somewhere off in the offices of the illuminati, an accountant will calculate the value of the oven that baked the crust, the refrigerator that cooled the filling, the bougie pie dish that made my effort look food-blog ready. But there’s no monetary value in the work I did that literally put food on the table, and I rarely, if ever, get paid to perform the music I love, or receive more than pocket change for the short stories I publish. I keep thinking that the solution for both problems exists in some future innovation, but I can’t imagine what that invention would be, and I can’t find proof of a real connection between the two.

Maybe ChatGPT knows the answer to this riddle? I can throw a penny into our new philosophy vending machine, but I might come up with a better answer myself if I think about it while I unload the dishwasher.

PS I didn’t use ai to write this, also didn’t even bother to push it through an ai extruder to check the grammar. I guess I’m just feeling too lazy today to push that button! Have a nice weekend.

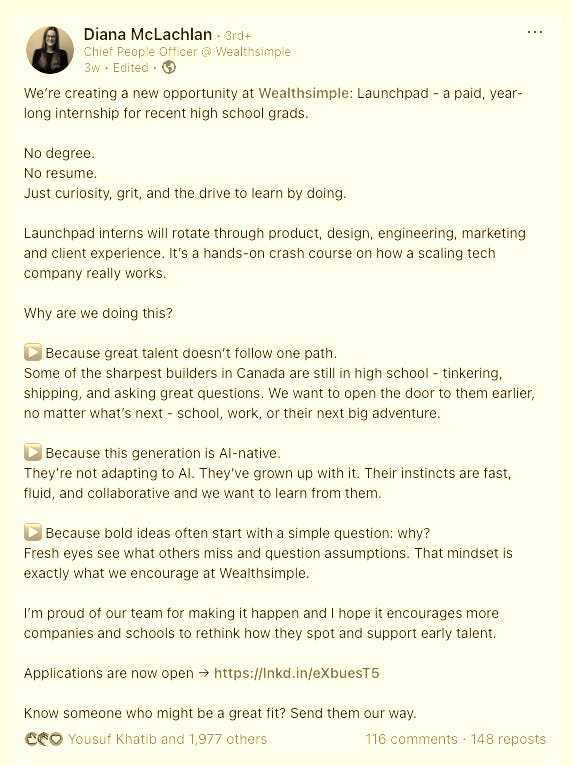

“AI-native high school interns”

Fintech worker.

Hello! I am a tech worker at a fintech. My workplace has been pushing AI really hard this year.

Here's the latest thing they thought up:

It 100% feels like testing the waters for just how unqualified and underpaid your workforce can be. Just as long as they can work the shovel of LLM they're good right?

The children yearn for the (LLM) mines!

“CrowdStrike”

Current CrowdStrike employee.

I work for CrowdStrike, the leading cybersecurity company in the United States. As a current employee, I can't reveal specific details about myself.

As you may have heard, CrowdStrike laid off 500 employees on May 7th, 2025. These were not underperformers. Many of them were relatively new hires. This action was presented as a strategic realignment with a special focus on "doubling down on our highest-impact opportunities," to quote CEO George Kurtz.

In the internal email, he states further:

AI investments accelerate execution and efficiency: AI has always been foundational to how we operate. AI flattens our hiring curve, and helps us innovate from idea to product faster. It streamlines go-to-market, improves customer outcomes, and drives efficiencies across both the front and back office. AI is a force multiplier throughout the business.

So, AI has literally killed many jobs at CrowdStrike this week. I'm fortunate to be among the survivors, but I don't know for how long.

Generative AI, particularly LLMs, is permeating every aspect of the company. It's in our internal chats. It's integrated into our note-taking tools. It's being used in triage, analysis, engineering, and customer communications. Every week, I'm pinged in an announcement that some new AI capability has been rolled out to me and that I am expected to make use of it. Customers who are paying for live human service packages from us are increasingly getting the output of an LLM instead. Quality Assurance reviewers have started criticizing reviewees for failing to run things through AI tools for things as trivial as spelling and grammar. Check out the front page and count the number of times "AI" is mentioned. It didn't used to be like this.

CrowdStrike is currently achieving record financials. At the time I write this, CRWD is trading at $428.63 in striking range of the stock's 52-week high. The efforts of my colleagues and I to rebuild from the incident of July 19, 2024 have been rewarded with shareholder approval and 500 layoffs. Some of the impacted individuals were recent graduates of 4-year schooling who, in addition to student loans, have moving expenses because they physically relocated to Texas shortly before this RIF occurred.

Many lower-level employees at CrowdStrike are big fans of generative AI; as techy people in a techy job, they fit the bill for that. Even so, many of them have become wary… of what increased AI adoption means for them and their colleagues. Some of the enthusiastic among them are beginning to realize that they're training the means of additional layoffs—perhaps their own.

CrowdStrikers have been encouraged to handle the additional per capita workload by simply working harder and sometimes working longer for no additional compensation on either count. While our Machine Learning systems continue to perform with excellence, I have yet to be convinced that our usage of genAI has been productive in the context of the proofreading, troubleshooting, and general babysitting it requires. Some of the genAI tools we have available to us are just completely useless. Several of the LLMs have produced inaccuracies which have been uncritically communicated to our customers by CrowdStrikers who failed to exhibit due diligence. Those errors were caught by said customers, and they were embarrassing to us all.

I would stop short of saying that the existence of genAI tools within the company is directly increasing the per capita workload, but an argument could be made of it indirectly accomplishing that. The net result is not a lightening of the load as has been so often promised.

Morale is at an all-time low. Many survivors have already started investigating their options to leave either on their own terms or whenever the executives inevitably decide an LLM is adequate enough to approximately replace us.

The company is very proud of its recognitions as an employer. As CrowdStrikers, we used to be proud of it too. Now we just feel betrayed.

“Coding assistants push”

Software engineer, health tech startup.

I work as a software engineer and we've been getting a push to adopt AI coding assistants in the last few months. I tried it, mostly to be able to critique, and found it super annoying, so I just stopped using it. But I'm starting to get worried. Our CEO just posted this in an internal AI dedicated Slack channel. The second message is particularly concerning.

[It’s a screenshot of a message containing a comment from another developer. It reads:]

"I am sufficiently AI-pilled to think that if you aren't using agentic coding tools, then you are the problem. They are good enough now that it's a skills issue. Almost everyone not using them will be unemployed in 2 years and won't know why (since they're the ones on Hacker News saying "these tools never work for me!" and it turns out they are using very bad prompts and are super defensive about it)."

We've had some layoffs long before this AI wave and the company has not picked up the pace in terms of hiring since. I'm sure now they're thinking twice before hiring anyone though. The biggest change was in how the management is enthusiastically incentivizing us to start using AI. First they offered coding assistants for everyone to use, then the hackdays we had every semester turned into a week long hackathon specifically focused on AI projects.

Now we have an engineer, if you can call him that, working on a project that will introduce more than 30k lines of AI generated code into our codebase, without a single unit test. It will be impossible to do a proper code review on this much code and it will become a maintenance nightmare and possibly a security hazard. I don't need to tell you how much management is cheering on that.

“My job hasn't been killed, yet”

Front end software engineer at a major software company.

My job hasn't been killed yet, but there's definitely a possibility that it could be soon. I work for a major software company as a front end software engineer. I believe that there's been AI-related development for about a year and a half. It's a little hard to nail down exactly because I'm one of the few remaining US-based developers and the majority of our engineering department is in India. The teams are pretty siloed and the day-to-day of who's on what teams and what they're doing is pretty opaque. There's been a pretty steady increase of desire and pressure to start using AI tools for a while now. As a result, timelines have been getting increasingly shorter, likewise the patience of upper management. They've tried to create tools that would help with some of the day-to-day repeatable UI pieces that I work on, but the results were unusable from my end and I found that I can create them on my own in the same amount of time.

Around October/November of last year, the CEO and President (who's the former head of Product) had decided to go all-in on AI development and integrate it in all aspects of our business. Not just engineering, but all departments (Sales, Customer Operations, People Operations, etc). Don't get a ton of insight from other departments other than I've heard that Customer Ops is hemorrhaging people and the People Ops sent an email touting that we could now use AI to write recognition messages to each other celebrating workplace successes (insulting and somewhat dystopian). On the engineering side, I think initially there was a push to be an AI leader in supply chain, so there were a lot of training courses, hackathons and (for India) AI-focused off-sites where they wanted to get broad adoption of AI tools and ideas for products that we can use AI in.

Then in February, the CEO declared that what we have been doing is no longer a growth business and we were introducing an AI control tower and agents, effectively making us an AI-first company. The agents themselves had names and AI-generated profile pictures of minorities that aren't actually represented in the upper levels of the company, which I find kind of gross. Since then, the CEO has been pretty insistent about AI in every communication and therefore there's an increased downward pressure to use it everywhere. He has never been as involved in the day-to-day workings of the company as he has been about AI. Most consequential is that somewhere he has gotten the idea that because code can now be generated in a matter of minutes, whole SaaS applications, like the ones we've been developing for years, can be built in a matter of days. He's read all these hype articles declaring 60-75% increase in engineering productivity. I guess there was a competitor in one of our verticals that has just come on the scene and done basically what our app can do, but with more functionality. A number of things could explain this, but the conclusion has been that they used AI and made our app in a month. So ever since then, it's been a relentless stream of pressure to fully use AI everywhere to "improve efficiency" and get things out as fast as possible. They've started mandating tracking AI usage in our JIRA stories, the CEO has led Engineering all-hands (he has no engineering background), and now he is mandating that we go from idea to release in a single sprint (2 weeks) or be able to explain why we're not able to meet that goal.

I've been working under increasingly more compressed deadlines for about a year and am pretty burned out right now, and we haven't even started pushing the AI warp speed churn that they've proposed recently. It's been pretty well documented how inaccurate and insecure these LLMs are and, for me, it seems like we're on a pretty self-destructive path here. We ostensibly do have a company AI code of conduct, but I don't know how this proposed shift in engineering priority doesn't break every guideline. I'm not the greatest developer in the world, but I try to write solid code that works, so I've been very resistant to using LLMs in code. I want my work to be reliable and understandable in case it does need to be fixed. I don't have time to mess around and go down rabbit holes that the code chatbots would inevitably send me down. So I foresee the major bugs and outages just sky-rocketing under this new status quo. How they pitch it to us is that we can generate the code fast and have plenty of time to think about architecture, keep a good work/life balance, etc.

But in practice, we will be under the gun of an endless stream of 2 week deadlines and management that won't be happy at how long everything takes or the quality of the output. The people making these decisions love the speed of code generation but never consider the accuracy and how big the problem is of even small errors perpetuated at scale. No one else is speaking up to these dangers, but I feel like if I do (well, more loudly than just to immediate low-level managers), I'll be let go. It's pretty disheartening and I would love to leave, but of course it's hard to find another job competing with all the other talented folks that have been let go through all this. Working in software development for so long and seeing so many colleagues accept that we are just prompt generators banging out substandard products has been rough. I'm imagining this must be kind of what it feels like to be in a zombie movie. I'm not sure how this all turns out, but it doesn't look great at the moment.

The one funny anecdote during all this AI insanity is they had someone from GitHub do a live presentation on Copilot and the agents. Not only was everything he demoed either unreliable or underwhelming, but he could not stop yawning loudly during his own presentation. Even the AI champions are tired.

Less than a month later, the engineer emailed me a followup.

And I just got laid off yesterday. The reason cited was they need full stack developers and want engineers that are in India, and not for performance. My front-end-focused position was rendered obsolete. Very plausible since they definitely prefer hiring young and less expensive developers abroad. So AI is not technically the direct cause, but definitely a factor in the background. They'll hire a bunch of new graduates to churn out whatever AI solutions that they think they can hype. Annoyingly, they did announce two new AI agents yesterday, again with faces and names of women. The positive is that they did give me a decent severance, so in the short term I'm fine financially, and also that I don't have to deal with the pressure of ridiculous deadlines.

“AI experience”

Edtech worker.

I work for a small edtech startup and do all of our marketing, communications, and social media. I've always enjoyed doing our ed policy newsletter and other writing related projects. My boss absolutely loves AI, but until recently I'd been able to avoid it. A few weeks ago, my boss let me know that all of my content writing would now be done on ChatGPT so I would have more time to work on other projects. He also wants me to use AI to generate images of students, which I've luckily been able to push back on.

Although he says it's a time saver, I don't actually have other projects, so not only am I creating complete slop, but I'm also left with large amounts of time to do nothing. Being forced to use AI has turned a job I liked into something I dread. As someone with a journalism background, it feels insulting to use AI instead of creating quality blog posts about education policy. Unfortunately, as a recent grad, I haven't had much luck finding another job despite applying to hundreds, so for now I have to make do with the situation, but I will say that having to use AI is making me reconsider where I'm working.

“AI makes everything worse”

Senior developer at a cloud company.

I work for a cloud service provider (who will retaliate if you don't post this anonymously, unfortunately), and they're absolutely desperate for the current AI fad to be useful for something.

They're completely ignoring the environmental costs (insane power requirements, draining lakes of freshwater for cooling, burning untold CPU and GPU hours that could be dedicated to something useful instead) because there's a buck to be made. They hope. But they're still greenwashing the company of course.

For cloud companies, AI is a gold rush; until the bubble bursts, they can sell ridiculous amounts of expensive server time (lots and lots of CPU/GPU/memory/storage) and tons of traffic to and from the models. They're selling shovels to the gold miners, and are in a great position to charge rent if someone strikes a vein of usefulness.

But they're desperate for this to keep going. They're demanding we use AI for literally everything in our jobs. Our managers want to know what we're using AI for and what AI "innovations" we've come up with. If we're not using AI for everything, they want to know why not. I don't think we're quite at the point of this being part of our performance evaluations, but the company is famously opaque about that, so who knows. It's certainly something the employees worry about.

My work involves standards compliance. Using AI for any part of it will literally double our work load because we'll have to get it to do the thing, and then carefully review and edit the output for accuracy. You can't do compliance work with vibes. What's the point of burning resources to summarize things when you need to review the original and then the output for accuracy anyway?

I can see a scenario coming fast that's going to set back software development by years (decades? who knows!):

C-suite: we don't need these expensive senior developers, interns can code with AI

C-suite: we don't need these expensive security developers, AI can find the problems

senior developers are laid off, or quit due to terrible working conditions (we're already seeing this)

they're replaced with junior developers, fresh out of school... cheap, with no sense of work-life balance, and no families to distract them

all the vibe coding goes straight to production because, obviously, we trust the AI and don't know any better; also we've been told to use AI for everything

at some point, all the bugs and security vulnerabilities make everything so bad it actually starts impacting the bottom line

uh oh, the vibe coders never progressed beyond junior skill levels, so nobody can do the code reviews, nobody can find and fix the security problems

if all the fired senior developers haven't retired or found other jobs (a lot of these people want to get out of tech, because big tech has made everything terrible), they'll need to be hired back, hopefully at massive premiums due to demand

If these tools were generally useful, they wouldn't need to force them on us, we'd be picking them up and running with them.

“Then came the tomatoes”

Tech worker at a well-known tech company.

I work at a fairly well-known tech company currently trying to transform itself from a respected, healthy brand to a win-at-all-costs hyperscaler. The result has mostly been a lot of bullshit marketing promises pegged to vaporware, abrupt shifts in strategy that are never explained, and most of all, the rapid degrading of a once healthy, candid corporate culture into one that is intolerant of dissent, enforces constant positivity, and just this week, ominously announced that we are “shifting to a high-performance culture.”

The company leadership also recently (belatedly) declared that “we are going all in on AI.”

I don’t use AI. I morally object to it, for reasons I hardly need to explain to you. And now I feel like I’m hiding in plain sight, terrified someone will notice I’m actually doing all my own work.

We’re hiring for new roles and have been explicitly told that no candidate will be considered for *any* job unless they’re on board with AI. Every department has to show how they’re “incorporating AI into their workflows.” I heard through the grapevine that anyone so much as expressing skepticism “does not have a future with the company.”

It is pretty bleak. I’d leave, but I keep hearing it’s the same everywhere.

But then something insane happened.

At the most recent company all-hands, typically the site of the most painful sycophancy, one of our executives gave a speech formally announcing our big AI gambit. The meeting is so big that there is no Zoom chat, so people can only directly react via emojis. At first, the exec’s AI speech was greeted by the typical heart-eyes and confetti emojis, but then I saw there were a few thumbs-down emojis thrown into the mix. This was shocking enough on its own, but then the thumbs-downs multiplied, tens and hundreds of them appearing on the screen, making those few little confettis seem weak and pathetic. I was already floored at this point, and then someone posted the first tomato. It caught on like wildfire until there were wave after wave of virtual tomatoes being thrown at the executive’s head—a mass outcry against being forced to embrace AI at gunpoint. He tried to keep going but his eyes kept darting to the corner of his screen where the emojis appeared, in increasing panic.

It was goddamn inspiring. And while the executives didn’t immediately abandon all their AI plans, they are definitely shaken by what happened, and nervous about mass dissent. As they should be.

Thanks again to every tech worker who shared their story with me, whether it was included here or not—and to every worker who has written in to AIkilledmyjob@pm.me, period. I intend to produce the next installment in the coming weeks, so subscribe below if that’s of interest. And if you’d like to support this work, and receive the paywalled Critical AI reports and special commentary, please consider becoming a paid subscriber. My wonderful paid subscribers are the only reason I am able to do any of this. A million thanks.

Finally, one more time with feeling: If your job has been impacted by AI, and you would like to share your story as part of this project, please do so at AIkilledmyjob@pm.me. If you’re willing to participate in an on-camera interview, contact us at AIkilledmyjob@perfectunion.us. Thanks everyone—until next time.

The two footnotes in this account were provided by the worker, and are presented exactly as shared. The first is this link: https://www.gitclear.com/coding_on_copilot_data_shows_ais_downward_pressure_on_code_quality

like how Google Docs signals typos, an example: https://superuser.com/questions/1796376/getting-rid-of-the-red-squiggly-underline-on-errors

Editor’s note: RIF is “reduction in force.”

Ed: Chief Marketing Officer.

Ed: PIP stands for Performance Improvement Plan — in tech, getting a PIP, or PIPd, is like getting an official warning that you’re underperforming and thus more likely to get terminated.

Ed: Software as a service.

Ed: All-hands are meetings that everyone in the company or department is required to attend.

“I feel like I’m hiding in plain sight, terrified someone will notice I’m actually doing all my own work.” What a backwards world we live in.

This needs to be repeated, highlighted and shoved in the face of every AI sycophant, Wall Street stock monkey or big tech executive till they are forced to counter it:

Forced adoption is not adoption. Just because you have faked the metric does not mean you have made it.

Bullshit metrics rigging is the name of the game now. Wall Street's pro-monopoly, pro-fascist and anti-worker machine of auto-pumping any stock that monopolises, lays off workers and "adopts AI" has empowered the cabal of McKinseyists, C-execs and technofascists against the people.

First it was monthly active users: cue AI companies giving away products for free, or forcing them in products that already have success. The desperation at Google to brag about MAU after forcing it in Google search, Android, Lens and soon to be Gmail. On its own, the Gemini app is a complete flop.

Then it was tokens, a bullshit metric when one realises that a) adoption has been forced (workers forced to use code assistants, Google auto-generating AI overviews in search) and b) token output has increased by 100x since the release of "reasoning" models.

Now it's adoption. Don't you know, 30% of all code is now AI generated (oh we've included auto-complete in that wink wink). Workers are adopting AI faster than any prior technology!, the McKinseyist says. No shit, execs are literally threatening to fire people if they don't use it. A farce if there ever was one.

The reason they are hopping desperately from one metric to another, is because for 3 years and still to this day, they have been unable to address the elephant in the room.

AI is not valued by the people and makes little to no money. $600B+ spent and <$20B revenue. Take away the foundation models, and it's <$5B revenue. The best selling AI SaaS product is a code assistant making $0.5B revenue. MSFT Azure AI is making as little as $2B. MSFT, Google, Salesforce, Adobe have pulled their standalone AI products and now forcefully bundle AI alongside a mandatory price increase due to how bad the sales were as a standalone.

They run away from revenue and profit metrics because it shows the world how much of a flop their project has been. Anytime you want an AI exec or shill to squirm just ask these two things.

It's time for workers and the people to form a class, a class far more powerful, against the machine. Across so many industries, people have not only rejected AI slop but actively hate on it and try to ruin its image: see the recent humiliation of Klarna, Suno, Duolingo, MSFT Gaming and Meta. It is working. Public backlash breeds more public backlash. A wonderful flywheel of AI hate.

The people continuing to reject AI will kill the AI industry. Most of the money has to eventually come from the consumer.