The great undermining of the American AI industry

How a Chinese app built by a hedge fund has upended not just Silicon Valley, but an economy increasingly tethered to a story about AI

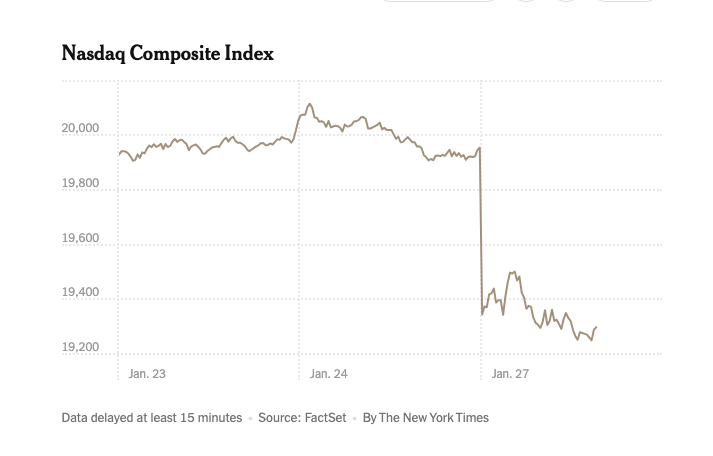

Well, this week’s off to another wild start. The stock market is falling, investors are panicking, and some of the most valuable companies in the world, particularly Nvidia and the AI-invested tech giants, are seeing hundreds of billions of dollars erased from their valuations. (At one point Nvidia shed 17% of its value, or $568 billion, in less than a day, a new record loss in market history. Much like the hottest-year records that now get smashed rather routinely thanks to climate change, these staggering losses come more frequently these days, too, which one might argue is not great, but I digress.)

As always, thanks for reading and subscribing. If you find this writing and analysis valuable, please do chip in for, oh, the cost of a cup of coffee a month. This work is 100% reader supported, and I deeply appreciate all of you who make it possible.

The catalyst, if you have not yet heard, is a Chinese company called DeepSeek that released an AI chatbot app that’s just about on par with the American companies’ products. (Remarkably, the company was spun out of an effort to build a stock-picking algorithm.) The catch is, according to the company’s researchers, DeepSeek trained its open source model for $5.5 million, compared to the $100 million Altman has said it cost to train OpenAI’s GPT-4. It reportedly matches the performance of OpenAI’s o1-mini at 1/50th the cost. And it’s popular, too. DeepSeek is the number one most downloaded app in the App Store, topping ChatGPT.

So what’s going on here? And why does this matter? I’ll have a bit more to say on this later, but essentially, as long as DeepSeek’s fundamentals are borne out, the research paper the company published holds up to scrutiny, and the app stays popular (and is not, say, banned by the state like certain other high-profile Chinese-made apps), then what we are seeing can be considered **a Great Undermining of the American AI industry’s foundational assumptions**. Yes, in all bold.

Take, for example, the big AI news of just last week: Sam Altman, Donald Trump, the massive investment firm SoftBank, and other partners announcing Stargate, a $500 billion project to build out data centers and other AI infrastructure. (The project was largely already in place pre-inauguration and was under construction in Texas, though it did not have such an absurd price tag associated with it; the announcement was widely seen as a way to flatter Trump, and perhaps to jockey for current and future state support.)

The reason that the idea of spending $500 billion on data centers for training and running chatbots was not immediately laughed out of the room is that the largest American AI companies have spent the last two years advancing a very specific case. As I’ve documented in detail in this newsletter, in columns for the Times, and maybe most comprehensively in this report for AI Now, the modern AI industry, as led by OpenAI and Sam Altman, has always been dependent on a narrative, and the assumptions that undergird it: Generative AI is the future, it’s improving rapidly, and thanks to that we’re on the road to Artificial General Intelligence. AGI, in turn, will enable enterprise software to “outperform humans at most economically valuable work,” as OpenAI puts it in the company’s charter. Many executives and managers very much want this.

But the models need to be scaled first.

Those models need ever more data, they need more compute power, and they need more energy to run. This is why OpenAI says it’s raising historic amounts of capital, why it lobbied the Biden administration for support in building data centers, and why it says it shifted from a nonprofit structure to a for-profit one in the first place. It simply needed massive amounts of resources, and like its competitors, would need more and more for the foreseeable future, until the fabled AGI was brought to fruition.

The success of DeepSeek, if it holds, undermines these assumptions; assumptions which undergird essentially the entire American tech sector’s approach to AI. As the reporter Karen Hao notes in a long thread on Bluesky,

> Much of the coverage has been focused on US-China tech competition. That misses a bigger story: DeepSeek has demonstrated that scaling up AI models relentlessly, a paradigm OpenAI introduced & champions, is not the only, and far from the best, way to develop AI.
>
> Thus far OpenAI & its peer scaling labs have sought to convince the public & policymakers that scaling is the best way to reach so-called AGI. This has always been more of an argument based in business than in science.
>
> There is empirical evidence that scaling AI models can lead to better performance. For businesses, such an approach lends itself to predictable quarterly planning cycles and offers a clear path for beating competition: Amass more chips.
>
> The problem is there are myriad huge negative externalities of taking this approach - not least of which is that you need to keep building massive data centers, which require the consumption of extraordinary amounts of resources.

Now that a company has figured out a way to produce an AI app that’s just as effective at producing satisfactory output as those of the big American companies, at a sliver of the cost, a $500 billion data center facility in the desert suddenly seems like an offensive boondoggle. And it’s caught the stars of the AI tech world flat-footed, apparently. The typically noisy Sam Altman hasn’t tweeted for two days, at the time of this writing. Elon Musk has yet to mention DeepSeek, either.

It’s worth underlining a couple of things here. First, generative AI long seemed destined to become a commodity; that ChatGPT can be so suddenly supplanted with a big news cycle about a competitor, and one that’s open source no less, suggests that this moment may have arrived faster than some anticipated. OpenAI is currently selling its most advanced model for $200 a month; if DeepSeek’s cost savings carry over to other models, and you can train an equally powerful model at 1/50th of the cost, it’s hard to imagine many folks paying such rates for long, or this ever becoming a significant revenue stream for the major AI companies. Since DeepSeek is open source, it’s only a matter of time before other AI companies release cheap and efficient versions of AI that’s good enough for most consumers, too, theoretically giving rise to a glut of cheap and plentiful AI, and boxing out those who have counted on charging for such services.

Second, this recent semi-hysterical buildout of energy infrastructure for AI will also likely soon halt; there will be no need to reopen any additional Three Mile Island nuclear plants for AI capacity if good-enough AI can be trained more efficiently. This too, to me, seemed likely to happen as generative AI was commoditized, since it was always somewhat absurd to have five different giant tech companies using insane amounts of resources to train basically the same models to build basically the same products.

What we’re seeing today can also be seen as, maybe, the beginning of the deflating of the AI bubble, which I have long thought to only be a matter of time, given all of the above, and the relative unprofitability of most of the industry. Just look at how these developments are shaking the whole industry. Here’s the New York Times:

> “The market naturally will worry about demand growth in computing power,” analysts at Jefferies wrote in a note. DeepSeek’s apparent breakthroughs on cost and efficiency “could prompt investors to ask hard questions” of tech leaders about their weighty investments in chips and data centers, they added.
>
> The turmoil also hit the stocks of utility companies that have opened new lines of business serving the voracious power needs of data centers. Constellation Energy plunged nearly 20 percent, partly reversing a long-running rally that has seen the power producer’s stock more than double over the past 12 months.
>
> U.S. Treasury yields fell sharply, as they often do when investors seek havens during times of turbulence. Yields move inversely to the price of debt, meaning that the value of Treasuries jumped on Monday.
>
> That weighed on the value of the dollar, which slipped against a basket of currencies of major U.S. trading partners. These moves faded somewhat in the afternoon as investors refocused on this week’s meeting of Federal Reserve officials, who are expected to pause their campaign of interest-rate cuts.

This is a truly fascinating moment. Things could go in so many different ways: Will tech stocks continue to tumble? Will it become clear that Silicon Valley is over-invested in a very resource-intensive strategy, perhaps needlessly? What then? Will the talk turn more pointedly Sinophobic, both within the industry and out, in an effort to try to demonize the company and its approach, as politicians, lawmakers, and industry insiders did with TikTok? Will Silicon Valley AI stalwarts like Altman be able to muster a convincing enough argument to keep the money flowing into their increasingly outsized enterprises? Will OpenAI and Anthropic press their case with the state and drive even harder to become too big to fail, angling for government contracts? What happens to the current construction of the AGI mythology, if it turns out a Chinese startup can make a serviceable AI clone for cheap? Does this puncture the armor of the AGI investment complex, and start to steer backers and partners away for real? Is the AGI fever breaking?

And why, we’ll be left wondering, regardless of how the dust settles, with the vast majority of resources for AI research concentrated in the United States, was it a Chinese hedge fund’s side project that unearthed this leap in efficiency? Could it be that the major AI players were more interested in fortifying their approach of more-is-better, broadening the scope of their project, and accumulating power, than attempting to innovate in a way that makes AI more nimble, more democratized, to use one of the industry’s preferred terms, and more efficient? Could it be that Lina Khan was right all along to want to break up the tech giants to encourage innovation?

I guess we’ll see soon enough.

We have the same question re: whether OpenAI was chasing the money because they needed it, or because nobody understands what they're doing well enough to know what it should cost and therefore OpenAI capitalized on that ignorance? My bet is on the latter because that's how tech VCs work. What an interesting development! I'm not sure it's great that we've been beaten in this race because unconstrained AI is bad for everyone. It's just a matter of time before we have a "lab leak" and given our current administration, we won't hear about it until we're scrambling to survive whatever form that takes. Hammers up! 😬

Whenever there's top-down innovation, never trust the bloated moat-defending incumbents who prioritize scale before solution. This is true for anything involving tech innovation, not just AI.