How big tech is force-feeding us AI

Plus, OpenAI's absurd listening tour, top AI scientists say AI is evolving beyond our control, Facebook is putting data centers in tents, and the AI bubble question — answered?

I do not think it will shock anyone to learn that big tech is aggressively pushing AI products. But the extent to which it has done so might. The sheer ubiquity of AI means that we take for granted the countless ways, many of them invisible, in which these products and features are foisted on us, and how Silicon Valley companies have systematically designed and deployed AI products onto their existing platforms in an effort to accelerate adoption.

It also happens to be the subject of a new study by design scholars Nolwenn Maudet, Anaëlle Beignon, and Thomas Thibault, who examined hundreds of instances of AI being deployed, highlighted, and advertised by Google, Meta, Adobe, Snapchat, and others, and analyzed them in a study called “Imposing AI: Deceptive design patterns against sustainability.” They also present the results in a handy illustrated guide, aptly titled “How tech companies are pushing us to use AI.” (It’s translated from the French, hence the sometimes awkward phrasings.)

The study is a stark reminder that AI has reached ubiquity not necessarily because users around the globe are demanding AI products, but for reasons often closer to the opposite.

As always, a quick reminder that BLOOD IN THE MACHINE is a fully independent publication, and my work is made possible 100% by paid supporters. If you find value in this work, and you’re able to, consider becoming a paid supporter. You’ll also get access to the Critical AI Report, included under the paywall below, which today takes a look at OpenAI’s weird listening tour, top AI researchers’ widely publicized claims that AI’s inner workings may soon be beyond our ability to understand them, Mark Zuckerberg’s mad dash to build data centers, and a detailed look at whether AI is in the middle of a big fat bubble.

Big tech’s deployment of AI has been both relentless and methodical, the study finds. By inserting AI features at the top of messaging apps and social media, where they are all but impossible to ignore, by deploying pop-ups and unsubtle design tricks to steer users toward AI in the interface, or by prompting people to use AI outright, tech companies are imposing AI on billions of users rather than waiting for them to adopt it eagerly.

Even when it’s not obvious to the user, tech companies are often engaged in what the authors call a “manipulation of visual choice architecture” that tilts users toward AI products. As the authors point out, on many platforms, “AI-based features are discretely favored among others using UI/UX design.”

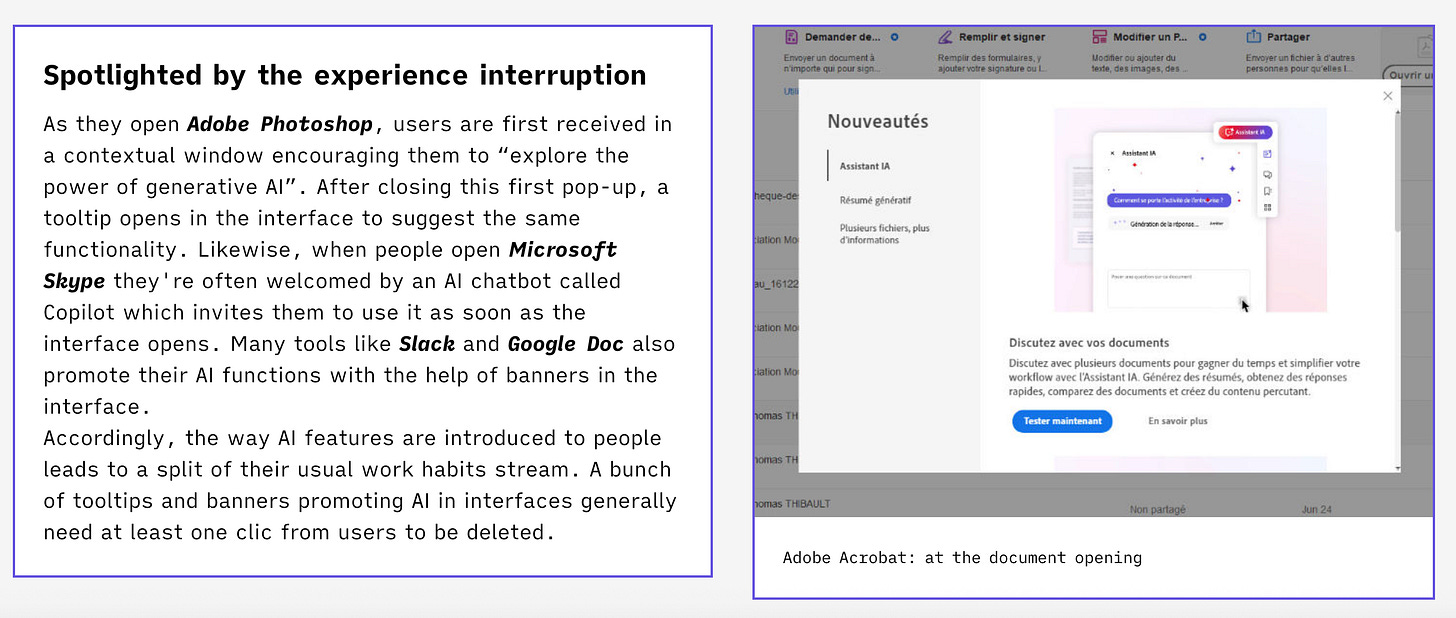

Calls to use AI products frequently interrupt regular use of software, whether through pop-ups, “tooltips,” or moving icons.

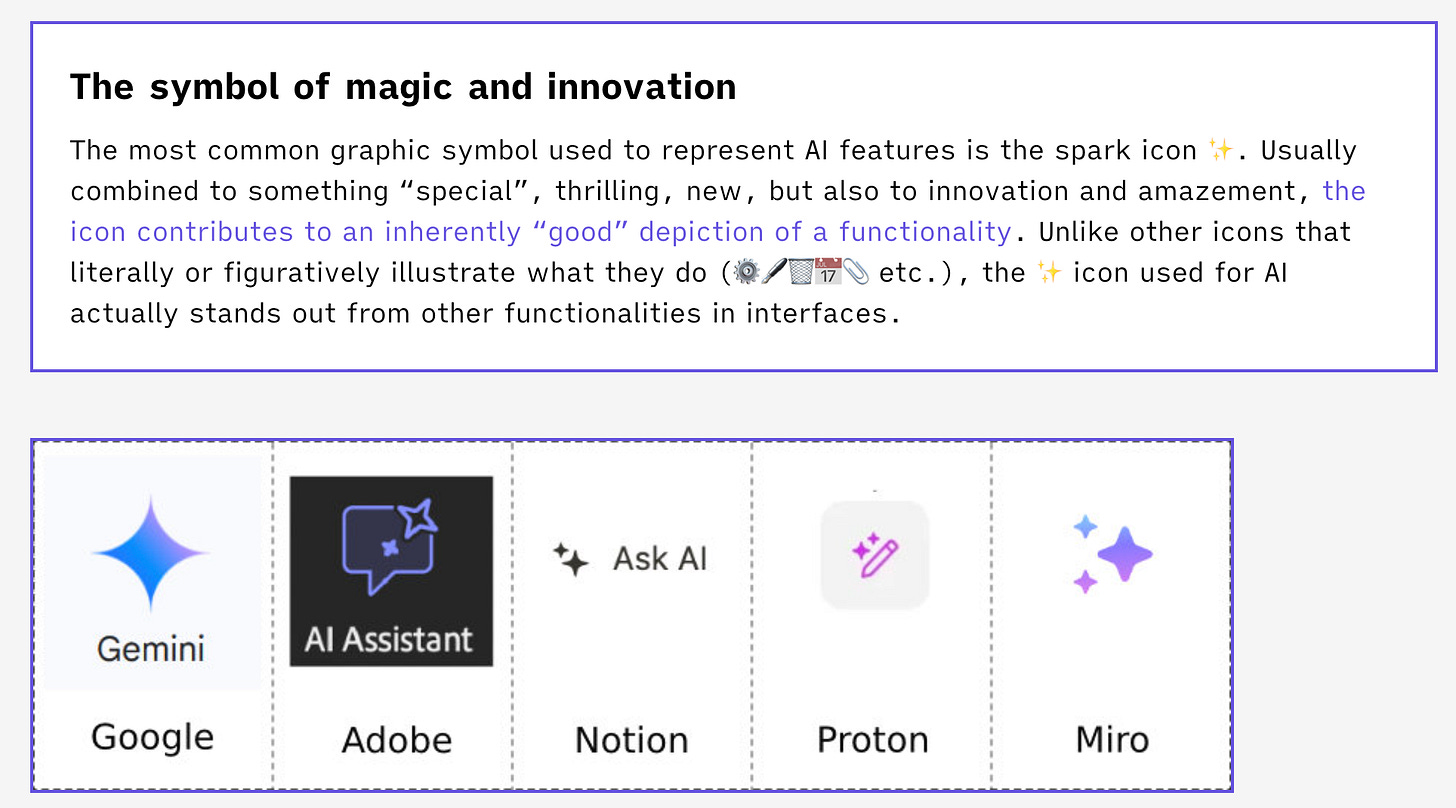

AI tools are given more dedicated buttons to encourage interaction, and they are often colored the same, even across companies: mauve, a mellowed purple-blue tone that the design scholars say is meant to connote magic.

Taken alone, little of this will be shocking to anyone who has paid attention to the major tech platforms over the last few years. But seeing the litany of examples collected, one after another, I found that the study helped underline the extent to which billions of users have been force-fed AI products.

It’s worth noting that in many cases these tactics, which lots of people find annoying and intrusive, succeed only because they’re being carried out by large or monopolistic companies with locked-in users and entrenched platforms. The study’s authors call this ‘forced use’ of AI, and it accounts for a large share of the AI use that companies attribute to their products.

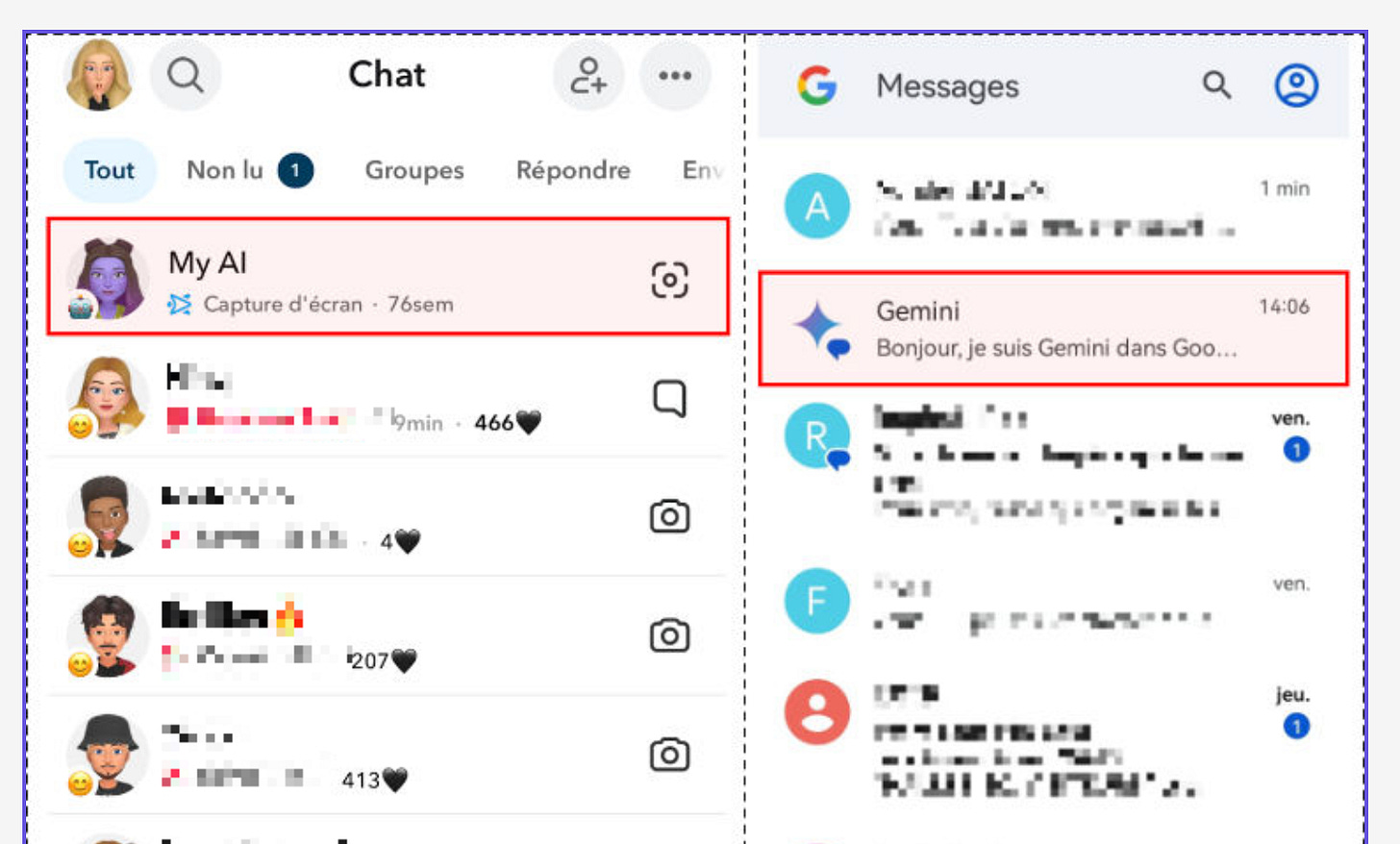

The most famous example is no doubt Google’s AI Overviews, which serve AI-generated snippets to users of the search engine used by 90% of Americans, whether they asked for them or not. But there are many, many more, from Snapchat automatically loading its My AI chatbot atop the contact lists of its hundreds of millions of users, to pop-ups exhorting users of Adobe Photoshop, the world’s most-used photo editing software, to “explore the power of generative AI.”

Some more details from the study:

Visual prominence of AI features. AI features tend to take up a lot of space and are often given the most valuable space in interfaces, toolbars and menus. LinkedIn’s interface, for example, features a messaging popover and a banner advertising an AI-based feature that occupy more than half of the space. In Notion, AI features take up nearly a third of the toolbar (Figure 4). In both Notion and DeepL, whenever users select words, a floating toolbar

AI features are privileged on the interface… On Snapchat the AI assistant, unlike all the other conversations, always remains on top and cannot be removed, regardless of whether it is actually used or not. While other conversations are gradually dropped down if they are never updated, AI assistants are not subject to the same treatment and limit the screen space dedicated to active conversations.

Interfering with non AI uses. We found that the ways AI features are pushed on interface users also interfere with the use of non-AI features and interrupt users’ usual workflows. Many tools advertise their AI features using banners in the interface, including Slack and Google Docs.

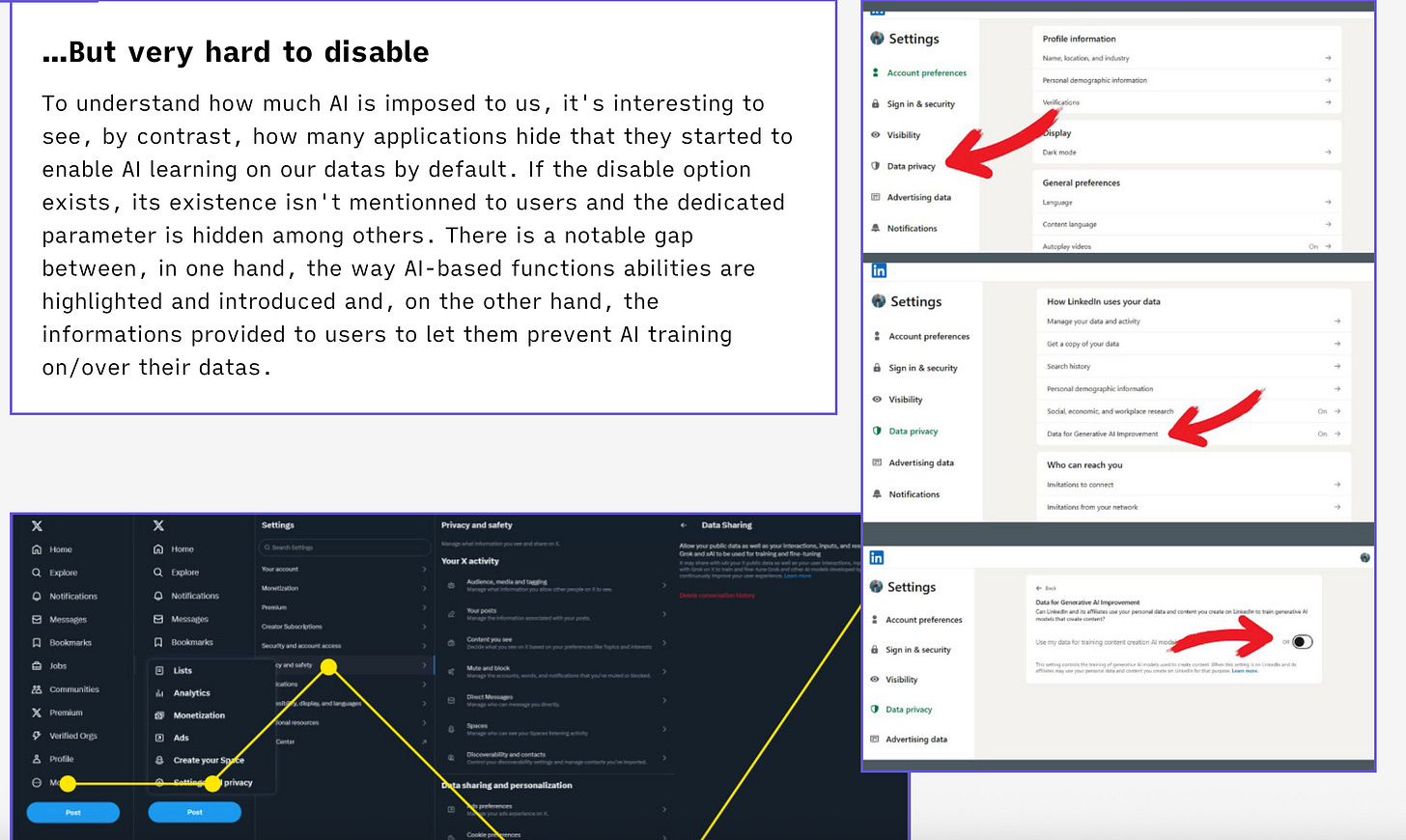

Imposing AI use by default. As we have seen, AI features are extremely easy to trigger, but they are also sometimes enabled by default or deployed before users are allowed to choose to deactivate them.

Magification of AI technicality. AI tends to be presented as a form of magic. Lupetti et al., for example, have shown how the magic metaphor can be used as a way to control the social meanings embedded in technology use.

Silicon Valley’s preferred narrative is that the generative AI boom is what the people want; that it’s been driven by consumer demand and organic user interest. But the details of how AI features have been developed, deployed, and served to consumers on the biggest pieces of digital infrastructure paint a starkly different picture.

Yes, ChatGPT and competitors like Claude are popular; they’ve garnered tens of millions of regular users. But aside from chatbots, many other widely touted AI products are failing to generate much organic consumer interest. Agentic AI isn’t generating much heat. I’m not sure I’ve ever heard a lay user talk about intentionally using Gemini or seeking out Meta’s AI products. AI Overviews are widely reviled. As a result, over the last two years big tech has been pushed to manufacture ubiquity: with ad campaigns, with unyielding hype cycles, and, as we’ve seen, with built-in design features and product placement designed to force AI on users.

And we’re all the worse for it. It’s further enshittifying the internet, leaving vital services harder to use, and producing new pores through which the giants can vacuum up our data.

The Critical AI Report, July 22nd Edition

So a funny thing happened last week. Remember how, due to its opportunistic leadership and bizarre corporate structure, the very-for-profit OpenAI is technically controlled by a small nonprofit? Well, that nonprofit apparently exists to do things like publish 40-page reports titled “Toward a People-First Future in The Age of Intelligence: Report of the OpenAI Nonprofit Commission,” which it did last week. I read some of it, and it’s pretty goofy stuff! Written in the style of a meandering Sam Altman blog post (seriously, reading this thing, I was borderline embarrassed for the authors), it is loaded with loftily worded proclamations about needing to “Invest in the People Sector,” whatever that means, and it goes on about “democratization” just weeks after the company it is supposed to control drove a campaign to ban states from making laws regarding AI, aka doing democracy.

Honestly, get a load of this thing. Here is a snippet:

“History will not memorialize aspirations. It will trace consequences.” Woof.

But the reason I am even aware of this thing at all is even funnier: The commission