Cognitive scientists and AI researchers make a forceful call to reject “uncritical adoption” of AI in academia

A new paper calls on academia to repel rampant AI in university departments and classrooms.

Greetings friends,

I know there’s been a lot of coverage in these pages of the dark side of commercial AI systems lately: of how management is using AI software to drive down wages and deskill work, of the psychological crises that AI chatbots are inflicting on vulnerable users, and of the failure of the courts to confront the monopoly power of Google, the biggest AI content distributor on the planet. To name a few.

But there are so many folks out there—scientists, workers, students, you name it—who are not content to let the future be determined by a handful of Silicon Valley giants alone, and who are pushing back in ways large and small. To wit: A new, just-published paper calls on academia to repel rampant AI adoption in their departments and classrooms.

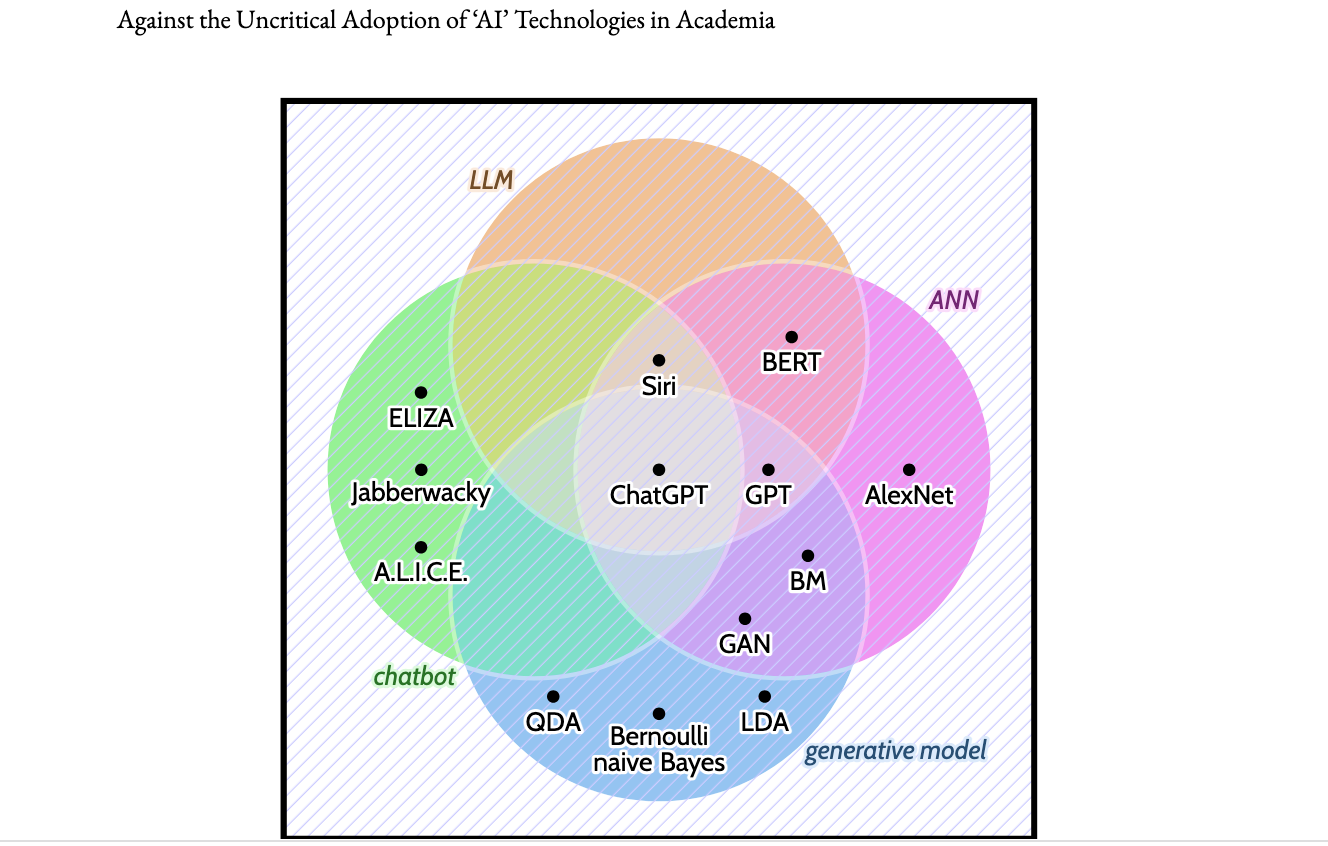

A group led by cognitive scientists and AI researchers hailing from universities in the Netherlands, Denmark, Germany, and the US has published a searing position paper urging educators and administrations to reject corporate AI products. The paper is called, fittingly, “Against the Uncritical Adoption of 'AI' Technologies in Academia,” and it makes an urgent and exhaustive case that universities should be doing a lot more to dispel tech industry hype and keep commercial AI tools out of the academy.

“It's the start of the academic year, so it's now or never,” Olivia Guest, an assistant professor of cognitive computational science at Radboud University, and the lead author of the paper, tells me. “We're already seeing students who are deskilled on some of the most basic academic skills, even in their final years.”

Indeed, preliminary research indicates that AI encourages cognitive offloading among students, and weakens retention and critical thinking skills.

The paper follows the publication in late June of an open letter to universities in the Netherlands, written by some of the same authors, and signed by over 1,100 academics, that took a “principled stand against the proliferation of so-called 'AI' technologies in universities.” The letter proclaimed that “we cannot condone the uncritical use of AI by students, faculty, or leadership.” It called for a reconsideration of the financial relationships between universities and AI companies, among other remedies.

The position paper, published September 5th, expands the argument and supports it with historical and academic research. It implores universities to cut through the hype, keep Silicon Valley AI products at a distance, and ensure students’ educational needs are foregrounded. Despite being an academic paper, it pulls few punches.

“When it comes to the AI technology industry, we refuse their frames, reject their addictive and brittle technology, and demand that the sanctity of the university both as an institution and a set of values be restored,” the authors write. “If we cannot even in principle be free from external manipulation and anti-scientific claims—and instead remain passive by default and welcome corrosive industry frames into our computer systems, our scientific literature, and our classrooms—then we have failed as scientists and as educators.”

See? It goes pretty hard.

“The position piece has the goal of shifting the discussion from the two stale positions of AI compatibilism, those who roll over and allow AI products to ruin our universities because they claim to know no other way, and AI enthusiasm, those who have drunk the Kool-Aid, swallowed all technopositive rhetoric hook, line, and sinker, and behave outrageously and unreasonably towards any critical thought,” Guest tells me.

“To achieve this we perform a few discursive maneuvers,” she adds. “First, we unpick the technology industry’s marketing, hype, and harm. Second, we argue for safeguarding higher education, critical thinking, expertise, academic freedom, and scientific integrity. Finally, we also provide extensive further reading.”

Here’s the abstract for more detail:

Under the banner of progress, products have been uncritically adopted or even imposed on users—in past centuries with tobacco and combustion engines, and in the 21st with social media. For these collective blunders, we now regret our involvement or apathy as scientists, and society struggles to put the genie back in the bottle. Currently, we are similarly entangled with artificial intelligence (AI) technology.

For example, software updates are rolled out seamlessly and non-consensually, Microsoft Office is bundled with chatbots, and we, our students, and our employers have had no say, as it is not considered a valid position to reject AI technologies in our teaching and research… universities must take their role seriously to a) counter the technology industry’s marketing, hype, and harm; and to b) safeguard higher education, critical thinking, expertise, academic freedom, and scientific integrity.

It’s very much worth spending some time with, and not just because it cites yours truly (though I am honored to see Blood in the Machine, the book, referenced a few times throughout). It’s an excellent resource for educators, administrators, and anyone concerned about AI in the classroom, really. And it’s a fine arrow in the quiver for those educators already eager to stand up to AI-happy administrations or department heads.

It also helps that these are scientists *working in AI labs and computer science departments*. Nothing against the comp lit and art history professors out there, whose views on the matter are just as valid, but coming from these authors, the argument stands to carry more weight among administrations or departments navigating the question of whether or how to integrate AI into their schools. It might inspire AI researchers and cognitive scientists skeptical of the enormous industry presence in their field to speak out, too.

And it does feel like these calls are gaining in resonance and momentum—it follows the publication of “Refusing GenAI in Writing Studies: A Quickstart Guide” by three university professors in the US, “Against AI Literacy,” by the learning designer Miriam Reynoldson, and lengthy cases for fighting automation in the classroom by educators. After Silicon Valley’s drive to capture the classroom—and success in scoring some lucrative deals—perhaps the tide is beginning to turn.

Silicon Valley goes to Washington

This, of course, is what those educators are up against. The leading lights of Silicon Valley all sitting down with the same president who has effectively dismantled the Department of Education, to kiss his ring, and to do, well, whatever this is:

Pretty embarrassing!

Okay, that’s it for today. Thanks as always for reading. Remember, Blood in the Machine is a precarious, 100% reader supported publication. I can only do this work if readers like you chip in a few bucks each month, or $60 a year, and I appreciate each and every one of you. If you can, please consider helping me keep Silicon Valley accountable. Until next time.

Hey! I’m not sure if this is on your radar already but moderation workers at TikTok in London and Berlin are unionising as they’re losing their jobs under the guise of AI. I say guise because the roles are actually being moved to low paid, low employment rights countries like Kenya, where these workers are continuing to work to HIDE the inefficacy of the AI. https://www.instagram.com/reel/DOMECR_EsxU/?igsh=MXJmaGV3cHo5OTh2eQ==

Thank you so much for this newsletter. It was also nice to see the link to the refusal piece from my own field of writing studies. I have been using your newsletter along with that of Gary Marcus as touchstones for teaching critical technology literacy in my classes, which is thinking very carefully about how the technology (which is not value neutral) is shaping, or deforming, your writing and thinking. You might find hope in that students have been very engaged. My sense is that, at least at my university, students are more skeptical about AI than they are being given credit for. Sadly our administration is really shoving AI at us, which is depressing to someone who firmly believes that "writing to learn," which situates the work of writing as critical to the learning process, has been demonstrated to be real since the 80s.